Milvus User Guide

# Database

Milvus introduces a database layer above collections, providing a more efficient way to manage and organize your data while supporting multi-tenancy.

# What is a database

In Milvus, a database serves as a logical unit for organization and managing data. To enhance data security and achieve multi-tenancy, you can create multiple databases to logically isolate data for different applications or tenants. For example, you create a database to store the data of user A and another database for user B.

# Create database

You can use the Milvus RESTful API or SDKs to create data programmatically.

from pymilvus import MilvusClient

client = MilvusClient(

uri="http://localhost:19530",

token="root:Milvus"

)

client.create_database(

db_name="my_database_1"

)

2

3

4

5

6

7

8

9

10

You can also set properties for the database when you create it. The following example sets the number of replicas of the database.

client.create_database(

db_name="my_database_2",

properties={

"database.replica.number": 3

}

)

2

3

4

5

6

# View database

You can use the Milvus RESTful API or SDKs to list all existing databases and view their details.

# List all existing databases

client.list_databases()

# Output

# ['default', 'my_database_1', 'my_database_2']

# Check database details

client.describe_database(

db_name="default"

)

# Output

# {"name": "default"}

2

3

4

5

6

7

8

9

10

11

12

13

# Manage database properties

Each database has its own properties, you can set the properties of a database when you create the database as described in Create database or you can alter and drop the properties of any existing database.

The following table lists possible database properties.

| Property Name | Type | Property Description |

|---|---|---|

database.replica.number | integer | The number of replicas for the specified database. |

database.resource_groups | string | The names of the resource groups associated with the specified database in a comma-separated list. |

database.diskQuota.mb | integer | The maximum size of the disk space for the specified database, in megabytes(MB). |

database.max.collections | integer | The maximum number of collections allowed in the specified database. |

database.force.deny.writing | boolean | Whether to force the specified database to deny writing operations. |

database.force.deny.reading | boolean | Whether to force the specified database to deny reading operations. |

# Alter database properties

You can alter the properties of an existing database as follows. The following example limits the number of collections you can create in the database.

client.alter_database_properties(

db_name="my_database_1",

properties={

"database.max.collections": 10

}

)

2

3

4

5

6

# Drop database properties

You can also reset a database property by dropping it as follows. The following example removes the limit on the number of collections you can create in the database.

client.drop_database_properties(

db_name="my_database_1",

property_keys=[

"database.max.collections"

]

)

2

3

4

5

6

# Use database

You can switch from one database to another without disconnecting from Milvus.

NOTE

RESTful API does not support this operation.

client.use_database(

db_name="my_database_2"

)

2

3

# Drop database

Once a database is no longer needed, you can drop the database. Note that:

- Default databases cannot be dropped.

- Before dropping a database, you need to drop all collections in the database first.

You can use the Milvus RESTful API or SDKs to create data programmatically.

client.drop_database(

db_name="my_database_2"

)

2

3

# FAQ

# How do i manage permissions for a database?

Milvus uses Role-Based Access Control (RBAC) to manage permissions. You can create roles with specific privileges and assign them to users, thus controlling their access to different databases. For more details, refer to the RBAC documentation.

# Are there any quota limitations for a database?

Yes, Milvus allows you to set quota limitations for a database, such as the maximum number of collections. For a comprehensive list of limitations, please refer to the Milvus Limits documentation.

# Collections

# Collection Explained

On Milvus, you can create multiple collections to manage your data, and insert your data as entities into the collections. Collection and entity are similar to tables and records in relational databases. This page helps you to learn about the collection and related concepts.

# Collection

A collection is a two-dimensional table with fixed columns and variant rows. Each column represents a field, and each row represents an entity.

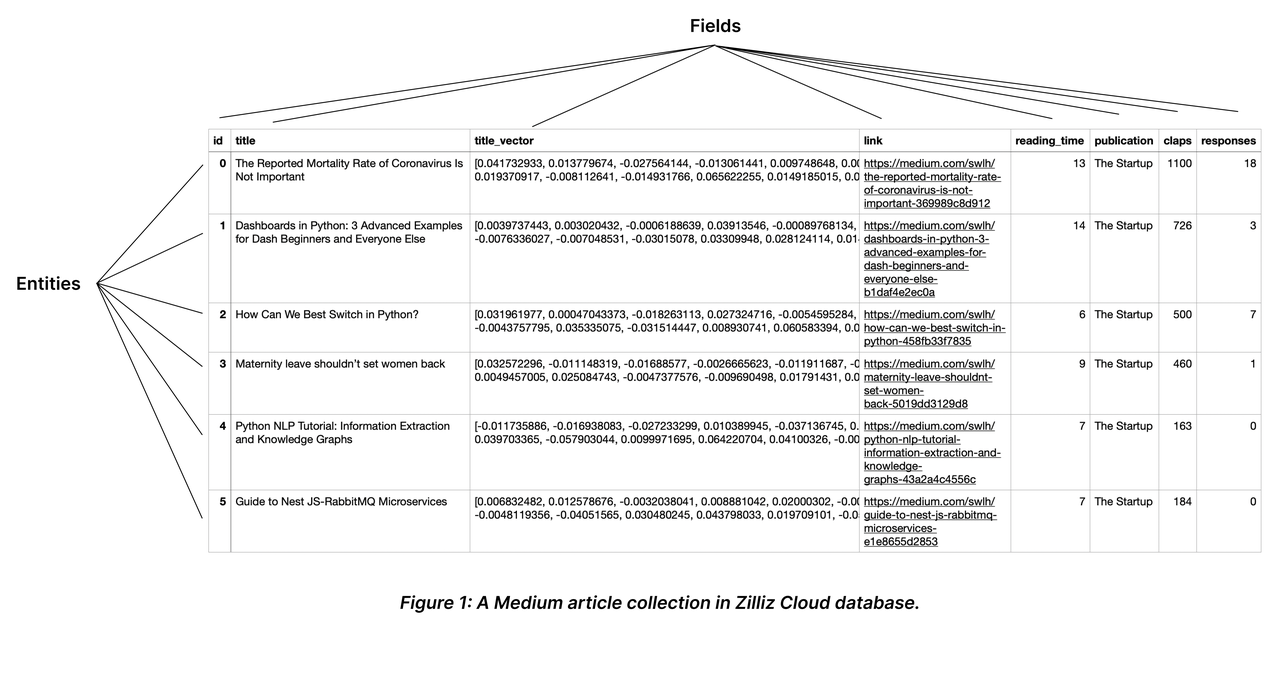

The following chart shows a collection with eight columns and six entities.

# Schema and Fields

When describing an object, we usually mention its attributes, such as size, weight, and position. You can use these attributes as fields in a collection. Each field has various constraining properties, such as the data type and the dimensionality of a vector field. You can form a collection schema by creating the fields and defining their order. For possible applicable data types, refer to Schema Explained.

You should include all schema-defined fields in the entities to insert. To make some of them optional, consider enabling dynamic field. For details, refer to Dynamic Field.

- Making them nullable or setting default values: For details on how to make a field nullable or set the default value, refer to Nullable & Default.

- Enabling dynamic field: For details on how to enable and use the dynamic field, refer to Dynamic Field.

# Primary key and AutoId

Similar to the primary field in a relational database, a collection has a primary field to distinguish an entity from others. Each value in the primary field is globally unique and corresponds to one specific entity.

As shown in the above chart, the field named id serves as the primary field, and the first ID 0 corresponds to an entity titled The Mortality Rate of Coronavirus is Not Important. There will not be any other entity that has the primary field of 0.

A primary field accepts only integers or strings. When inserting entities, you should include the primary field values by default. However, if you have enabled AutoId upon collection creation, Milvus will generate those values upon data insertion. In such a case, exclude the primary field values from the entities to be inserted.

For more information, please refer to Primary Field & AutoId.

# Index

Creating indexes on specific fields improves search efficiency. You are advised to create indexes for all the fields your service relies on, among which indexes on vector fields are mandatory.

# Entity

Entities are data records that share the same set of fields in a collection. The values in all fields of the same row comprise an entity.

You can insert as many entities as you need into a collection. However, as the number of entities mounts, the memory size it takes also increases, affecting search performance.

For more information, refer to Schema Explained.

# Load and Release

Loading a collection is the prerequisite to conducting similarity searches and queries in collections. When you load a collection, Milvus loads all index files and the raw data in each field into memory for fast response to searches and queries.

Searches and queries are memory-intensive operations. To save the cost, you are advised to release the collections that are currently not in use.

For more details, refer to Load & Release.

# Search and Query

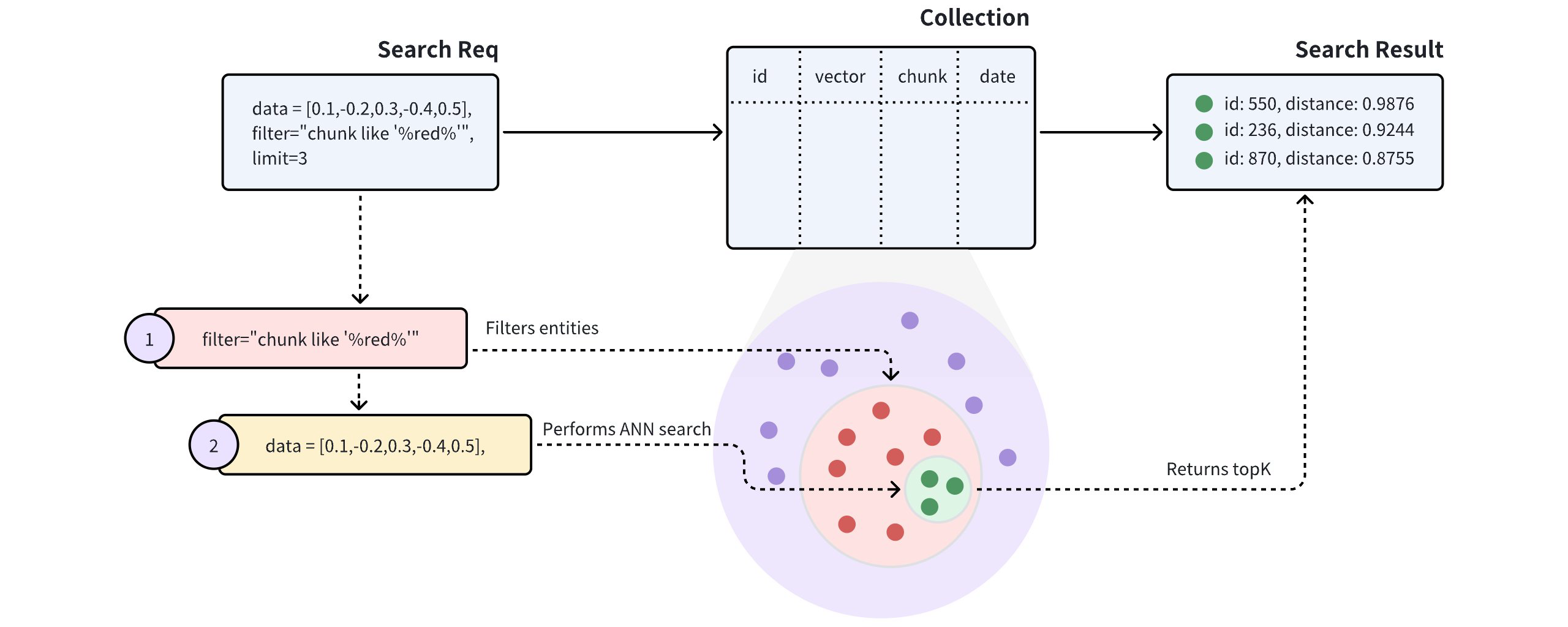

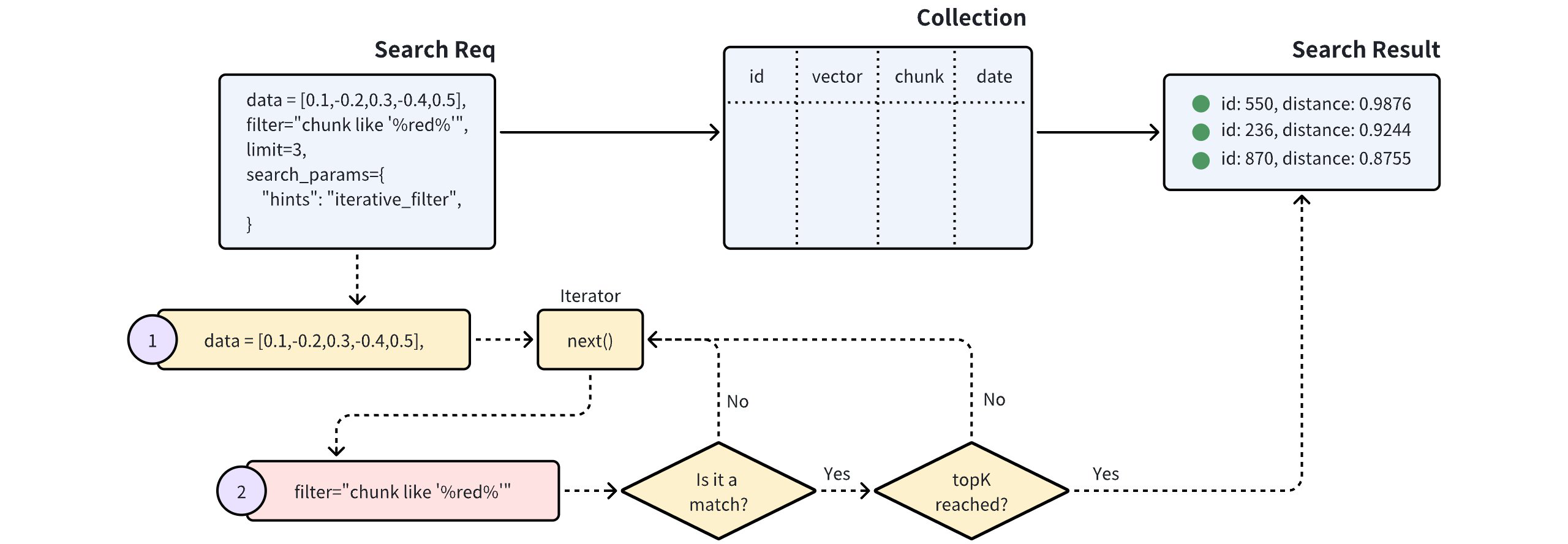

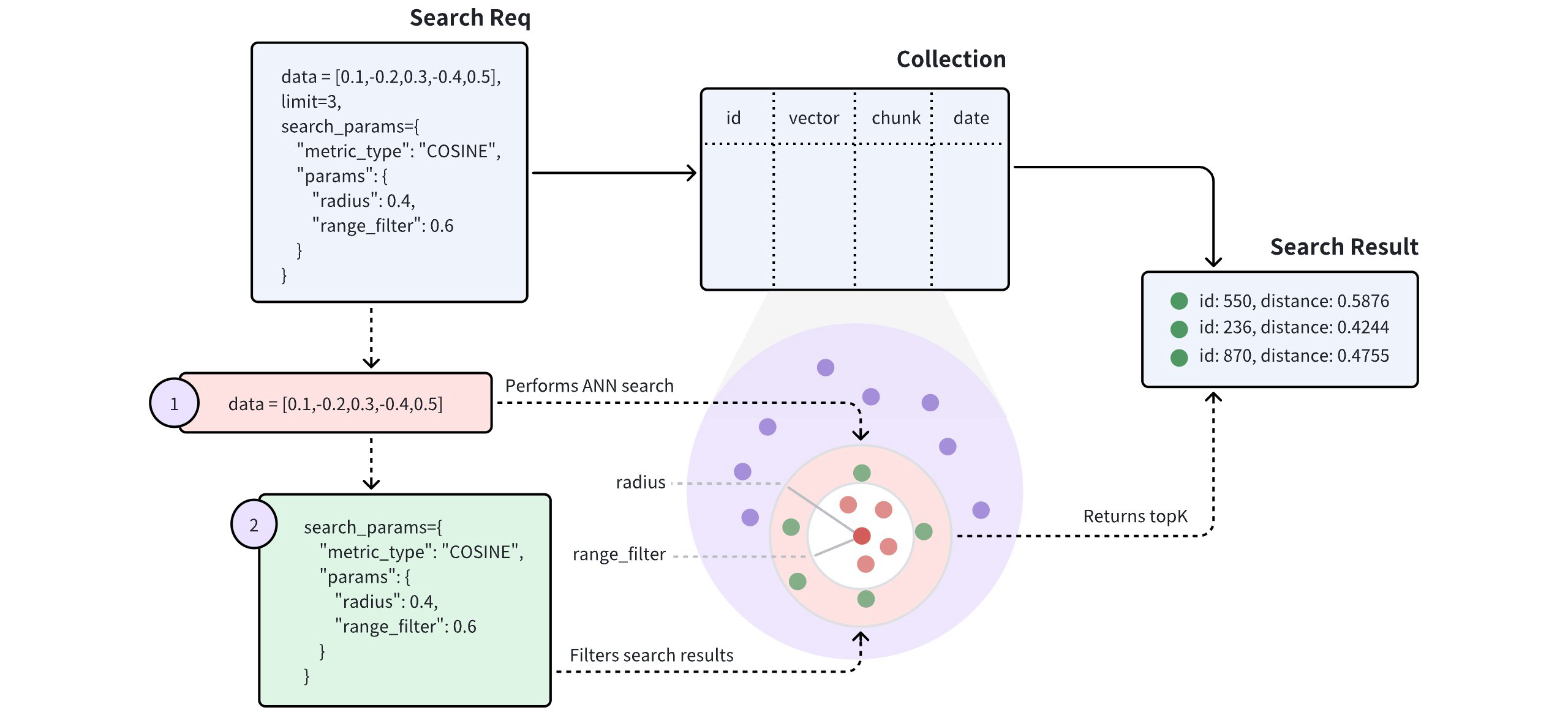

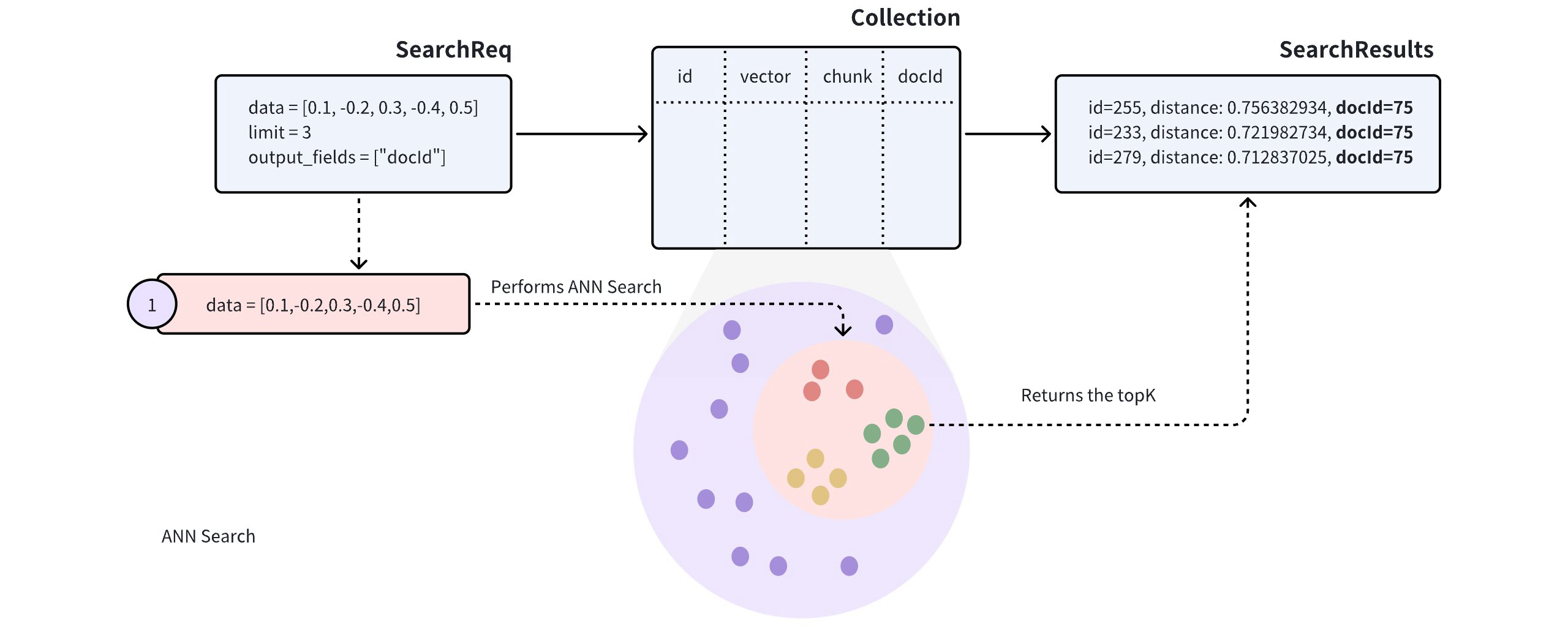

Once you create indexes and load the collection, you can start a similarity search by feeding one or several query vectors. For example, when receiving the vector representation of your query carried in a search request, Milvus uses the sepcified metric type to measure the similarity between the query vector and those in the target collection before returning those that are semantically similar to the query.

You can also include metadata filtering within searches and queries to improve the relevancy of the results. Note that, metadata filtering conditions are mandatory in queries but optional in searches.

For details on applicable metric types, refer to Metric Types.

For more information about searches and queries, refer to the articles in the Search & Rerank chapter, among which, basic features are:

- Basic ANN Search

- Filtered Search

- Range Search

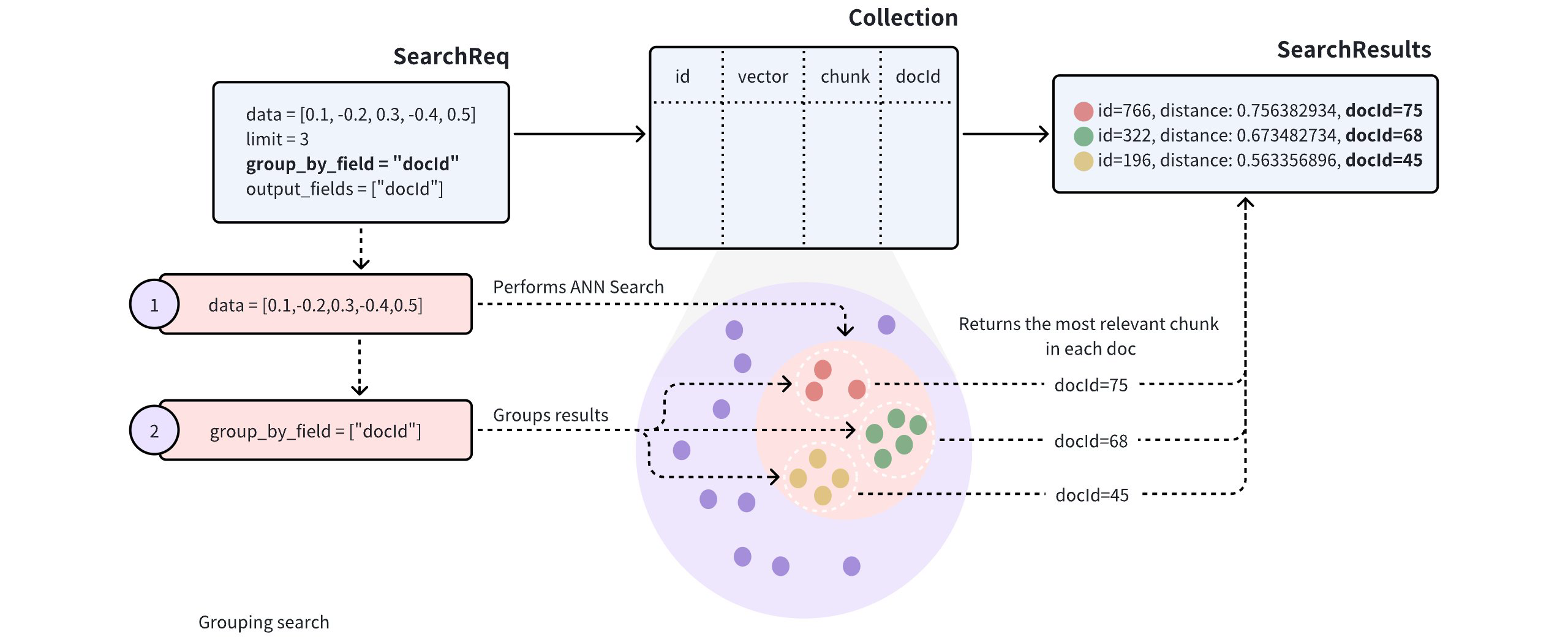

- Grouping Search

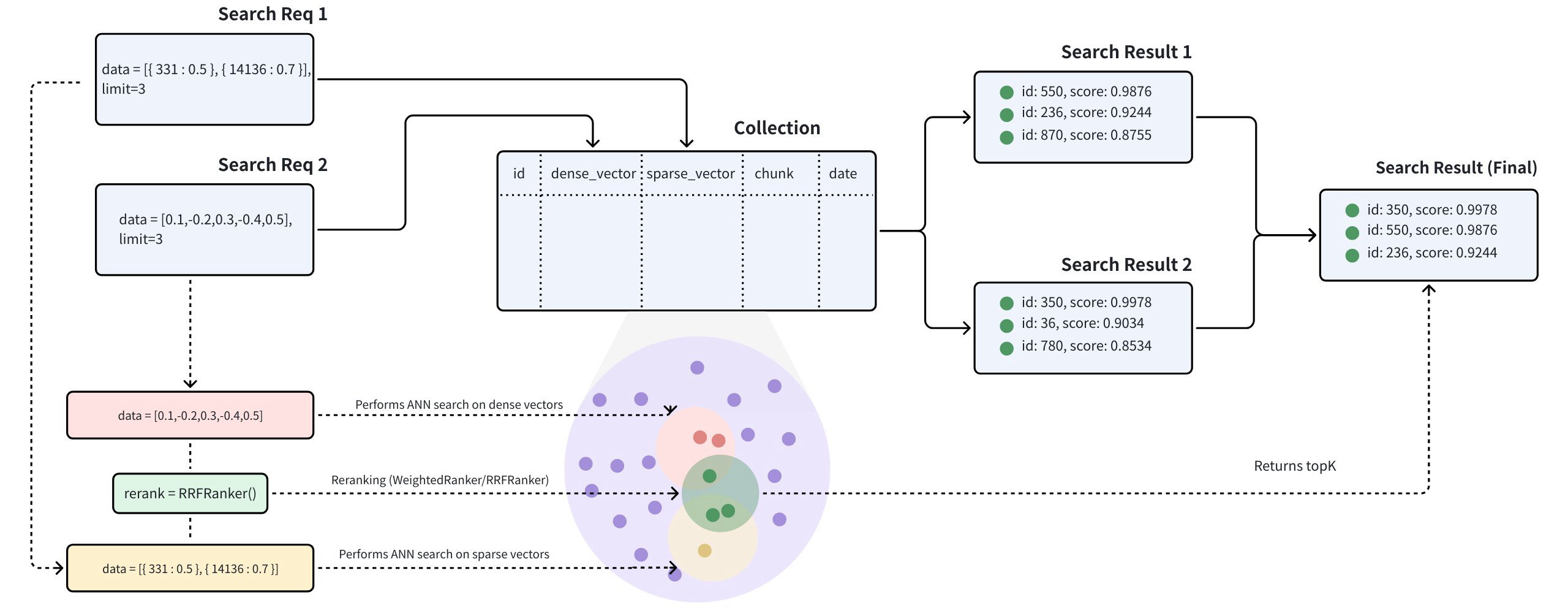

- Hybrid Search

- Search Iterator

- Query

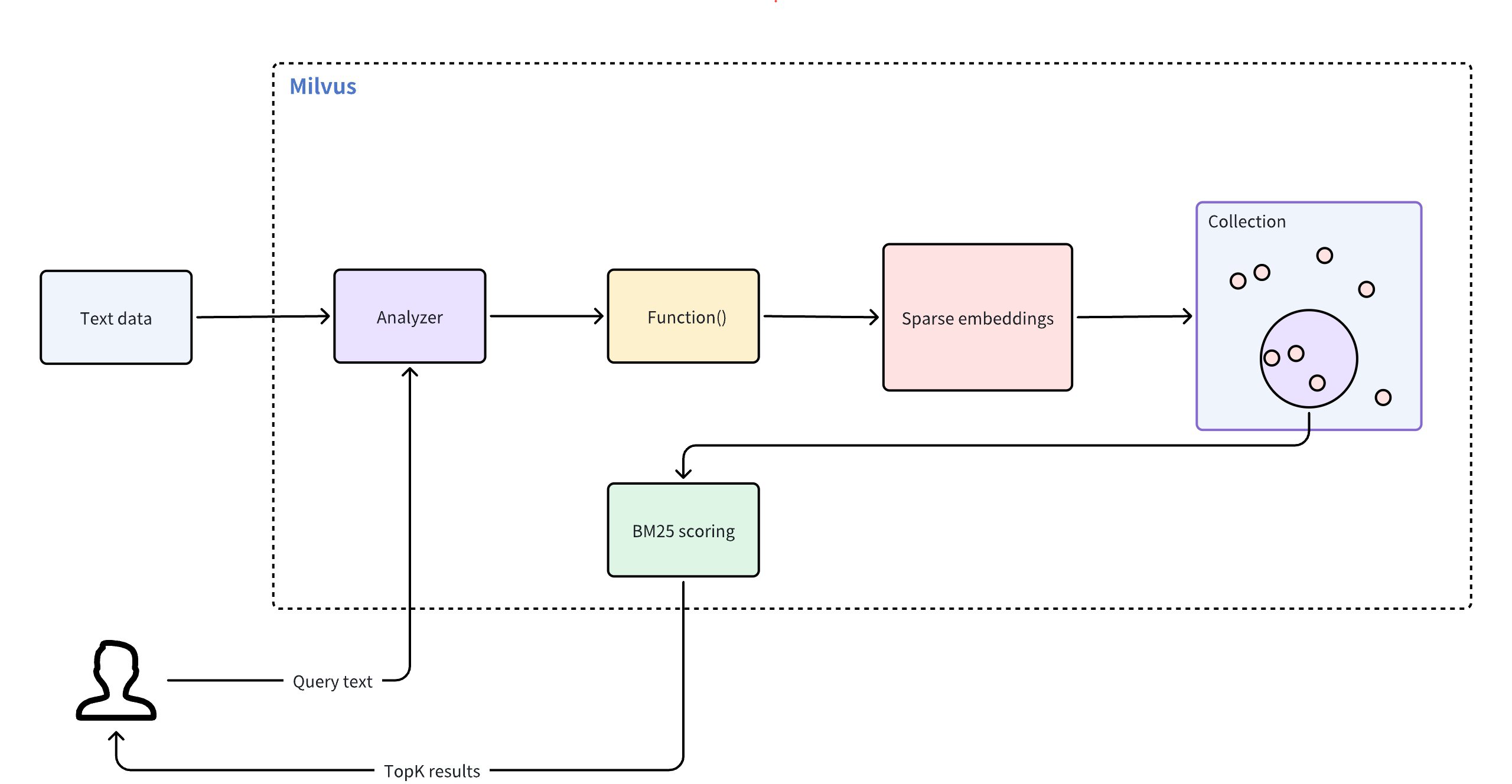

- Full Text Search

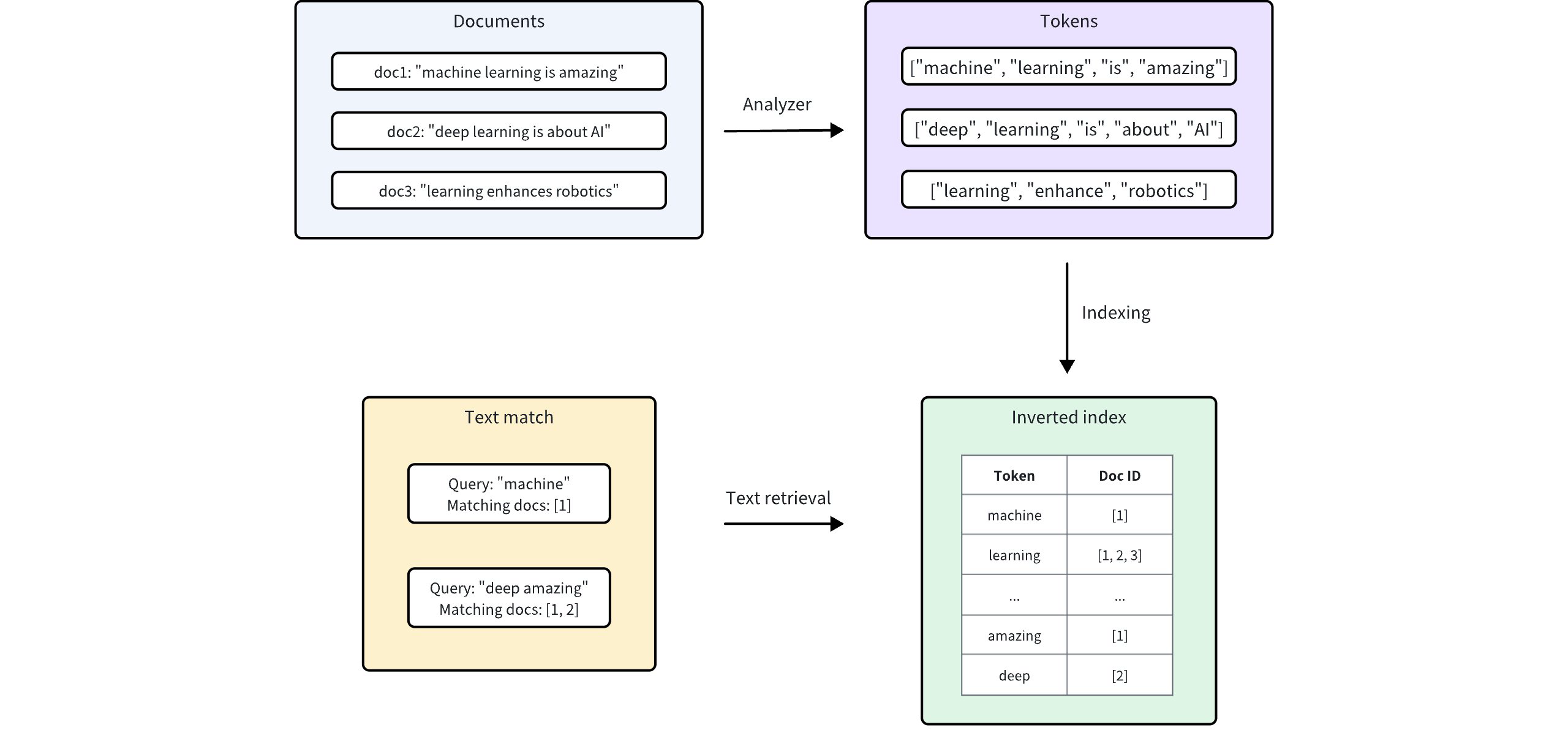

- Text Match

In addition, Milvus also provides enhancements to improve search performance and efficiency. They are disabled by default, and you can enable and use them according to your service requirements. They are

# Partition

Partitions are subsets of a collection which share the same field set with its parent collection, each containing a subset of entities.

By allocating entities into different partitions, you can create entity groups. You can conduct searches and queries in sepcific partitions to have Milvus ignore entities in other partitions, and improve search efficiency.

For details, refer to Manage Partitions.

# Shard

Shards are horizontal slices of a collection. Each shard corresponds to a data input channel. Every collection has a shard by default. You can set the appropriate number of shards when creating a collection based on the expected throughput and the volume of the data to insert into the collection.

For details on how to set the shard number, refer to Create Collection.

# Alias

You can create aliases for your collections. A collection can have serveral aliases, but collections cannot share an alias. Upon receiving a request against a collection, Milvus locates the collection based on the provided name. If the collection by the provided name does not exist, Milvus continues locating the provided name as an alias. You can use collection aliases to adapt your code to different scenarios.

For more details, refer to Manage Aliases.

# Function

You can set functions for Milvus to derive fields upon collection creation. For example, the full-text search function uses the user-defined function to derive a sparse vector field from a specific varchar field. For more information on full-text search, refer to Full Text Search.

# Consistency Level

Distributed database systems usually use the consistency level to define the data sameness across data nodes and replicas. You can set separate consistency levels when you create a collection or conduct similarity searches within the collection. The applicable consistency levels are Strong, Bounded Staleness, Session, and Eventually.

For details on these consistency levels, refer to Consistency Level.

# Create Collection

You can create a collection by defining its schema, index parameters, metric type, and whether to load it upon creation. This page introduces how to create a collection from scratch.

# Overview

A collection is a two-dimensional table with fixed columns and variant rows. Each column represents a field, and each row represents an entity. A schema is required to implement such structural data management. Every entity to insert has to meet the constraints defined in the schema.

You can determine every aspect of a collection, including its schema, index parameters, metric type, and whether to load it upon creation to ensure that the collection fully meets your requirements.

To create a collection, you need to:

# Create Schema

A schema defines the data structure of a collection. When creating a collection, you need to design the schema based on your requirements. For details, refer to Schema Explained.

The following code snippets create a schema with the enabled dynamic field and three mandatory fields named my_id, my_vector, and my_varchar.

NOTE

You can set default values for any scalar field and make it nullable. For details, refer to Nullable & Default.

# 3. Create a collection in customized setup mode

from pymilvus import MilvusClient, DataType

client = MilvusClient(

uri="http://localhost:19530",

token="root:Milvus"

)

# 3.1 Create schema

schema = MilvusClient.create_schema(

auto_id=False,

enable_dynamic_field=True

)

# 3.2 Add fields to schema

schema.add_field(field_name="my_id", datatype=DataType.INT64, is_primary=True)

schema.add_field(field_name="my_vector", datatype=DataType.FLOAT_VECTOR, dim=5)

schema.add_field(field_name="my_varchar", datatype=DataType.VARCHAR, max_length=512)

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

# (Optional) Set Index Parameters

Creating an index on a specific field accelerates the search against this field. An index records the order of entities within a collection. As shown in the following code snippets, you can use metric_type and index_type to select appropriate ways for Milvus to index a field and measure similarities between vector embeddings.

On Milvus, you can use AUTOINDEX as the index type for all vector field, and one of COSINE, L2 and IP as the metric type based on your needs.

As demonstrated in the above code snippets, you need to set both the index type and metric type for vector fields and only the index type for the scalar fields. Indexes are mandatory for vector fields, and you are advised to create indexes on scalar fields frequently used in filtering conditions.

For details, refer to Index Vector Fields and Index Scalar Fields.

# 3.3 Prepare index parameters

index_params = client.prepare_index_params()

# 3.4 Add indexes

index_params.add_index(

field_name="my_id",

index_type="AUTOINDEX"

)

index_params.add_index(

field_name="my_vector",

index_type="AUTOINDEX",

metric_type="COSINE"

)

2

3

4

5

6

7

8

9

10

11

12

13

14

# Create a Collection

If you have created a collection with index parameters, Milvus automatically loads the collection upon its creation. In this case, all fields mentioned in the index parameters are indexed.

The following code snippets demonstrate how to create the collection with index parameters and check its load status.

# 3.5 Create a collection with the index loaded simultaneously

client.create_collection(

collection_name="customized_setup_1",

schema=schema,

index_params=index_params

)

res = client.get_load_state(

collection_name="customized_setup_1"

)

print(res)

# Output

#

# {

# "state": "<LoadState: Loaded>"

# }

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

You can also create a collection without any index parameters and add them afterward. In this case, Milvus does not load the collection upon its creation.

The following code snippet demonstrates how to create a collection without an index, and the load status of the collection remains uploaded upon creation.

# 3.6 Create a collection and index it separately

client.create_collection(

collection_name="customized_setup_2",

schema=schema,

)

res = client.get_load_state(

collection_name="customized_setup_2"

)

print(res)

# Output

#

# {

# "state": "<LoadState: Notload>"

# }

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

# Set Collection Properties

You can set properties for the collection to create to make it fit into your service. The applicable properties are as follows.

# Set Shard Number

Shards are horizontal slices of a collection, and each shard corresponds to a data input channel. By default, every collection has one shard. You can specify the number of shards when creating a collection to better suit your data volume and workload.

As a general guideline, consider the following when setting the number of shards:

- Data size: A common practice is to have one shard for every 200 million entities. You can also estimate based on the total data size, for example, adding one shard for every 100 GB of data you plan to insert.

- Stream node utilization: If your Milvus instance has multiple stream nodes, using multiple shards is recommended. This ensure that the data insertion workload is distributed across all available stream nodes, preventing some from being idle while others are overloaded.

The following code snippet demonstrates how to set the shard number when you create a collection.

# With shard number

client.create_collection(

collection_name="customized_setup_3",

schema=schema,

num_shards=1

)

2

3

4

5

6

# Enable mmap

Milvus enables mmap on all collection by default, allowing Milvus to map raw field data int memory instead of fully loading them. This reduces memory footprints and increases collection capacity. For details on mmap, refer to Use mmap.

# With mmap

client.create_collection(

collection_name="customized_setup_4",

schema=schema,

enable_mmap=False

)

2

3

4

5

6

# Set Collection TTL

If the data in a collection needs to be dropped for a specific period, consider setting its Time-To-Live(TTL) in seconds. Once the TTL times out, Milvus deletes entities in the collection. The deletion is asynchronous, indicating that searches and queries are still possible before the deletion is complete.

The following code snippet sets the TTL to one day (86400 seconds). You are advised to set the TTL to a couple of days at minimum.

# With TTL

client.create_collection(

collection_name="customized_setup_5",

schema=schema,

properties={

"collection.ttl.seconds": 86400

}

)

2

3

4

5

6

7

8

# Set Consistency Level

When creating a collection, you can set the consistency level for searches and queries in the collection. You can also change the consistency level of the collection during a specific search or query.

# With consistency level

client.create_collection(

collection_name="customized_setup_6",

schema=schema,

consistency_level="Bounded",

)

2

3

4

5

6

For more on consistency levels, see Consistency Level.

# Enable Dynamic Field

The dynamic field in a collection is a reserved JavaScript Object Notation (JSON) field named $meta. Once you have enabled this field, Milvus saves all non-schema-defined fields carried in each entity and their values as key-value pairs in the reserved field.

For details on how to use the dynamic field, refer to Dynamic Field.

# View Collections

You can obtain the name list of all the collections in the currently dataase, and check the details of a specific collection.

# List Collections

The following example demonstrates how to abtain the name list of all collections in the currently connected database.

from pymilvus import MilvusClient, DataType

client = MilvusClient(

uri="http://localhost:19530",

token="root:Milvus"

)

res = client.list_collections()

print(res)

2

3

4

5

6

7

8

9

10

If you have already created a collection named quick_setup, the result of the above example should be similar to the following.

["quick_setup"]

# Describe Collection

You can also obtain the details of a specific collection. The following example assumes that you have already created a collection named quick_setup.

res = client.describe_collection(

collection_name="quick_setup"

)

print(res)

2

3

4

5

The result of the above example should be similar to the following.

{

'collection_name': 'quick_setup',

'auto_id': False,

'num_shards': 1,

'description': '',

'fields': [

{

'field_id': 100,

'name': 'id',

'description': '',

'type': <DataType.INT64: 5>,

'params': {},

'is_primary': True

},

{

'field_id': 101,

'name': 'vector',

'description': '',

'type': <DataType.FLOAT_VECTOR: 101>,

'params': {'dim': 768}

}

],

'functions': [],

'aliases': [],

'collection_id': 456909630285026300,

'consistency_level': 2,

'properties': {},

'num_partitions': 1,

'enable_dynamic_field': True

}

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

# Modify Collection

You can rename a collection or change its settings. This page focuses on how to modify a collection.

# Rename Collection

You can rename a collection as follows.

from pymilvus import MilvusClient

client = MilvusClient(

uri="http://localhost:19530",

token="root:Milvus"

)

client.rename_collection(

old_name="my_collection",

new_name="my_new_collection"

)

2

3

4

5

6

7

8

9

10

11

# Set Collection Properties

The following code snippet demonstrates how to set collection TTL.

from pymilvus import MilvusClient

client.alter_collection_properties(

collection_name="my_collection",

properties={"collection.ttl.seconds": 60}

)

2

3

4

5

6

The applicable collection properties are as follows:

| Property | When to Use |

|---|---|

collection.ttl.seconds | If the data of a collection needs to be deleted after a specific period, consider setting its Time-To-Live(TTL) in seconds. Once the TTL times out, Milvus deletes all entities from the collection. THe deletion is asynchronous, indicating that searches and queries are still possible before the deletion is complete. For details, refer to Set Collection TTL. |

mmap.enabled | Memory mapping (Mmap) enables direct memory access to large files on disk, allowing Milvus to store indexes and data in both memory and hard drives. This approach helps optimize data placement policy based on access frequency, expanding storage capacity for collections without impacting search performance. For details, refer to Use mmap |

partitionkey.isolation | With Partition Key Isolation enabled, Milvus groups entities based on the Partition Key value and creates a separate index for each of these groups. Upon receiving a search request, Milvus locates the index based on the Partition Key value specified in the filtering condition and restricts the search scope within the entities included in the index, thus avoiding scanning irrelevant entities durint the search geatly enhancing the search performance. For details, refer to Use Partition Key Isolation. |

# Drop Collection Properties

You can also reset a collection property by dropping it as follows.

client.drop_collection_properties(

collection_name="my_collection",

property_keys=[

"collection.ttl.seconds"

]

)

2

3

4

5

6

# Load & Release

Loading a collection is the prerequisite to conducting similarity searches and queries in collections. This page focuses on the procedures for loading and releasing a collection.

# Load Collection

When you load a collection, Milvus loads the index files and the raw data of all fields into memory for rapid response to searches and queries. Entities inserted after a collection load are automatically indexed and loaded.

The following code snippets demonstrate how to load a collection.

from pymilvus import MilvusClient

client = MilvusClient(

uri="http://localhost:19530",

token="root:Milvus"

)

# 7. Load the collection

client.load_collection(

collection_name="my_collection"

)

res = client.get_load_state(

collection_name="my_collection"

)

print(res)

# Output

#

# {

# "state": "<LoadState: Loaded>"

# }

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

# Load Specific Fields

Milvus can load only the fields involved in searches and queries, reducing memory usage and improving search performance.

NOTE

Partial collection loading is currently in beta and not recommanded for production use.

The following code snippet assumes that you have created a collection named my_collection, and there are two fields named my_id and my_vector in the collection.

client.load_collection(

collection_name="my_collection",

load_fields=["my_id", "my_vector"], # Load only the specified fields

skip_load_dynamic_field=True # Skip loading the dynamic field

)

res = client.get_load_state(

collection_name="my_collection"

)

print(res)

# Output

# {

# "state": "<LoadState: Loaded>"

# }

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

If you choose to load specific fields, it is worth noting that only the fields included in load_fields can be used as filters and output fields in searches and queries. You should always include the names of the primary field and at least one vector field in load_fields.

You can also use skip_load_dynamic_field to determine whether to load the dynamic field. The dynamic field is a reserved JSON field named $meta and saves all non-schema-defined fields and their values in key-value pairs. When loading the dynamic field, all keys in the fields are loaded and availabe for filtering and output. If all keys in the dynamic field are not involved in metadata filtering and output, set skip_load_dynamic_field to True.

To load more fields after the collection load, you need to release the collection first to avoid possible errors prompted because of index changes.

# Release Collection

Searches and queries are memory-intensive operations. To save the cost, you are advised to release the collections that are currently not in use.

The following code snippet demonstrates how to release a collection.

# 8. Release the collection

client.release_collection(

collection_name="my_collection"

)

res = client.get_load_state(

collection_name="my_collection"

)

print(res)

# Output

# {

# "state": "<LoadState: Notload>"

# }

2

3

4

5

6

7

8

9

10

11

12

13

14

15

# Set Collection TTL

Once data is inserted into a collection, it remains there by default. However, in some scenarios, you may want to remove or clean up data after a certain period. In such cases, you can configure the collection's Time-to-Live (TTL) property so that Milvus automatically deletes the data once the TTL expires.

# Overview

Time-to-Live (TTL) is commonly used in databases for scenarios where data should only remain valid or accessible for a certain period after any insertion or modification. Then, the data can be automatically removed.

For instance, if you ingest data daily but only need to retain records for 14 days, you can configure Milvus to automatically remove any data older than that by setting the collection's TTL to 14 * 14 * 3600 = 1209600 seconds. This ensure that only the most of recent 14 days' worth of data remain in the collection.

NOTE

Expired entities will not appear in any search or query results. However, they may stay in the storage until the subsequent data compaction, which should be carried out within the next 24 hours.

You can control when to trigger the data compaction by setting the dataCoord.compaction.expiry.tolerance configuraiton item in your Milvus configuration file.

This configuration item defaults to -1, indicating that the existing data compaction interval applies. However, when you can change its value to a positive integer, like 12, data compaction will be triggered the specified number of hours after any entities become expired.

The TTL property in a Milvus collection is specified as an integer in seconds. Once set, any data that surpasses its TTL will be automatically deleted from the collection.

Because the deletion process is asynchronous, data might not be removed from search results exactly once the specified TTL has elapsed. Instead, there may be a delay, as the removal depends on the garbage collection (GC) and compaction processes, which occur at non-deterministic intervals.

# Set TTL

You can set the TTL property when you:

# Set TTL when creating a collection

The following code snippet demonstrates how to set the TTL property when you create a collection.

from pymilvus import MilvusClient

# With TTL

client.create_collection(

collection_name="my_collection",

schema=schema,

properties={

"collection.ttl.seconds": 1209600

}

)

2

3

4

5

6

7

8

9

10

# Set TTL for an existing collection

The following code snippet demonstrates how to alter the TTL property in an existing collection.

client.alter_collection_properties(

collection_name="my_collection",

properties={"collection.ttl.seconds": 1209600}

)

2

3

4

# Drop TTL setting

If you decide to keep the data in a collection indefinitely, you can simply drop the TTL setting from that collection.

client.drop_collection_properties(

collection_name="my_collection",

property_keys=["collection.ttl.seconds"]

)

2

3

4

# Set Consistency Level

As a distributed vector database, Milvus offers multiple levels of consistency to ensure that each node or replica can access the same data during read and write operations. Currenty, the supported levels of consistency include Strong, Bounded, Eventually, and Session, with Bounded being the default level of consistency used.

# Overview

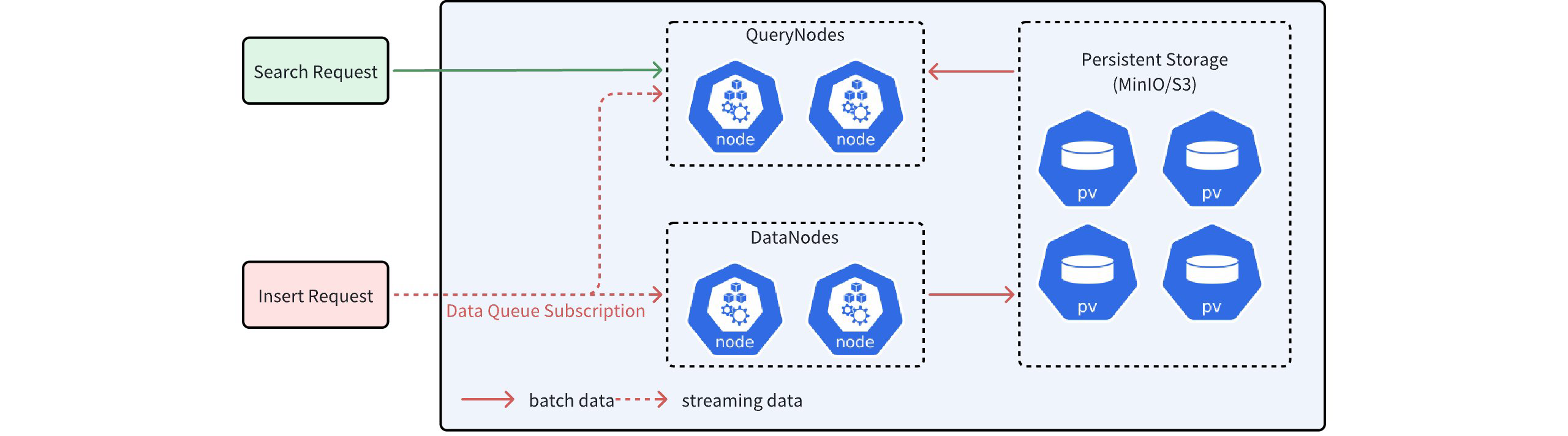

Milvus is a system that separates storage and computation. In this system, DataNodes are responsible for the persistence of data and ultimately store it in distributed object storage such as MinIO/S3. QueryNode handle computational tasks like Search. These tasks involve processing both batch data and streaming data. Simply put, batch data can be understood as data that has already been stored in object storage while streaming data refers to data that has not yet been stored in object storage. Due to network latency, QueryNodes often do not hold the most recent streaming data. Without additional safeguards, performing Search directly on streaming data may result in the loss of many uncommitted data points, affecting the accuracy of search results.

As shown in the figure above, QueryNodes can receive both streaming data and batch data simultaneously after receiving a Search request. However, due to network latency, the streaming data obtained by QueryNodes may be incomplete.

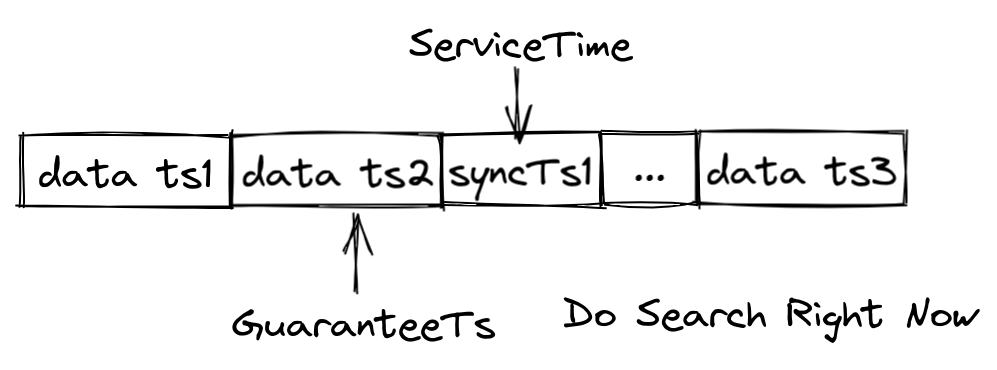

To address this issue, Milvus timestamps each record in the data queue and continuously inserts synchronization timestamps into the data queue. Whenever a synchronization timestamp (syncTs) is received, QueryNodes sets it as the ServiceTime, meaning that QueryNodes can see all data prior to that Service Time. Based on the ServiceTime, Milvus can provide guarantee timestamps (GuaranteeTs) to meet different user requirements for consistency and availability. Users can inform QueryNodes of the need to include data prior to a specified point in time in the search scope by specifying GuaranteeTs in their requests.

As shown in the figure above, if GuaranteeTs is less than ServiceTime, it means that all data before the specified time point has been fully written to disk, allowing QueryNodes to immediately perform the Search operation. When GuaranteeTs is greater than ServiceTime, QueryNodes must wait until ServiceTime exceeds GuaranteeTs before they can execute the Search operation.

Users need to make a trade-off between query accuracy and query latency. If users have high consistency requirements and are not sensitive to query latency, they can set GuaranteeTs to a value as large as possible; if users wish to receive search results quickly and are more tolerant of query accuracy, then GuaranteeTs can be set to a smaller value.

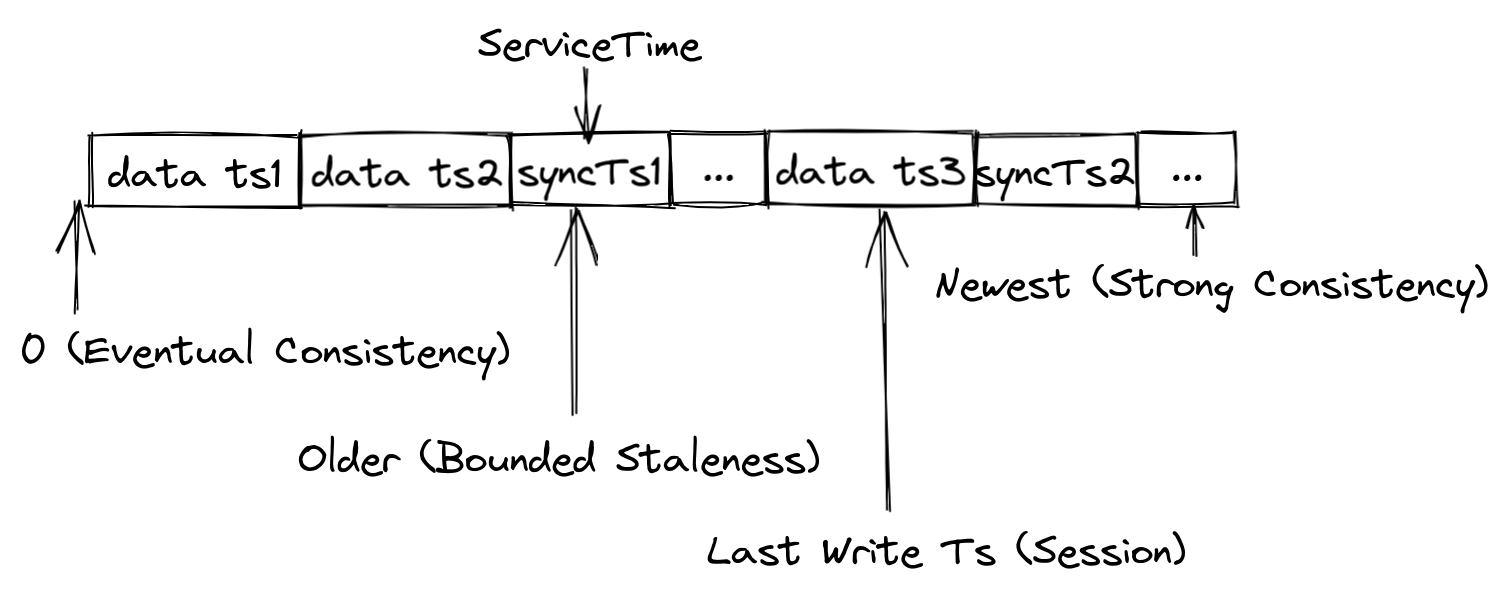

Milvus provides four types of consistency levels with different GuaranteeTs.

- Strong: The latest timestamp is used as the GuaranteeTs, and QueryNodes have to wait until the ServiceTime meets the GuaranteeTs before executing Search requests.

- Eventual: The GuaranteeTs is set to an extremely small value, such as 1, to avoid consistency checks so that QueryNodes can immediately execute Search requests upon all batch data.

- Bounded Staleness: The GuranteeTs is set to a time point earlier than the latest timestamp to make QueryNodes to perform searches with a tolerance of certain data loss.

- Session: The latest time point at which the client inserts data is used as the GuaranteeTs so that QueryNodes can perform searches upon all the data inserted by the client.

Milvus uses Bounded Staleness as the default consistency leve. If the GuaranteeTs is left unspecified, the latest ServiceTime is used as the GuaranteeTs.

# Set Consistency Level

You can set different consistency levels when you create a collection as well as perform searches and queries.

# Set Consistency Level upon Creating Collection

When creating a collection, you can set the consistency level for the searches and queries within the collection. The following code example sets the consistency level to Strong.

client.create_collection(

collection_name="my_collection",

schema=schema,

consistency_level="Bounded"

)

2

3

4

5

Possible values for the consistency_level parameter are Strong, Bounded, Eventually, and Session.

# Set Consistency Level in Search

You can always change the consistency level for a specific search. The following code example sets the consistency level back to the Bounded. The change applies only to the current search request.

res = client.search(

collection_name="my_collection",

data=[query_vector],

limit=3,

search_params={"metric_type": "IP"},

consistency_level="Bounded",

)

2

3

4

5

6

7

This parameter is also available in hybrid searches and the search iterator. Possible values for the consistency_level parameter are Strong, Bounded, Eventually, Session.

# Set Consistency Level in Query

You can always change the consistency level for a specific search. The following code example sets the consistency level to the Eventually. THe setting applies only to the current query request.

res = client.query(

collection_name="my_collection",

filter="color like \"red%\%",

output_fields=["vector", "color"],

limit=3,

consistency_level="Eventually",

)

2

3

4

5

6

7

This parameter is also available in query iterator. Possible values for the consistency_level parameter are Strong, Bounded, Eventually, Session.

# Manage Partitions

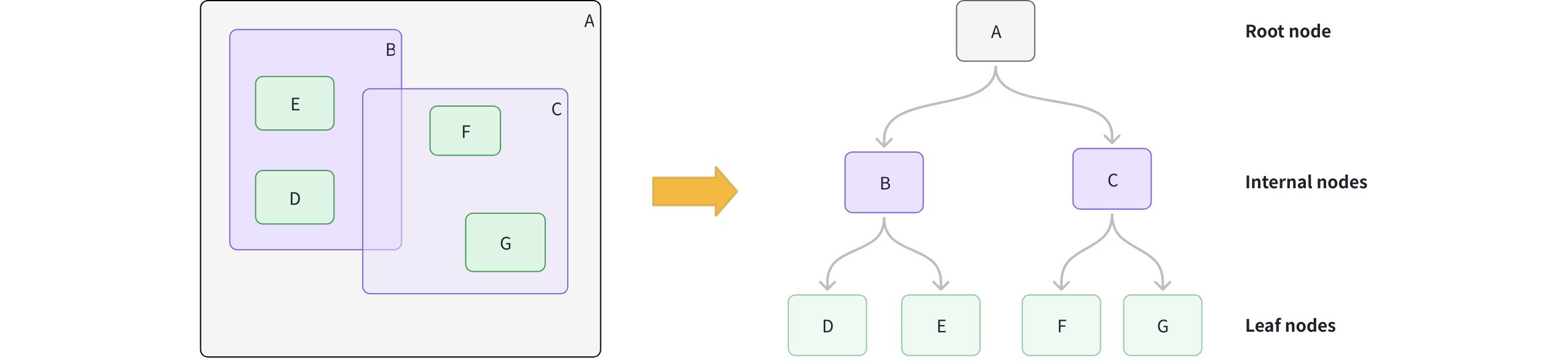

A partition is a subset of a collection. Each partition shares the same data structure with its parent collection but contains only a subset of the data in the collection. This page helps you understand how to manage partitions.

# Overview

When creating a collection, Milvus also creates a partition named _default in the collection. If you are not going to add any other partitions, all entities inserted into the collection go into the default partition, and all searches and queries are also carried out within the default partition.

You can add more partitions and insert entities into them based on certain criteria. Then you can restrict your searches and queries within certain partitions, improving search performance.

A collection can have a maximum of 1024 partitions.

NOTE

The Partition Key feature is a search optimization based on partitions and allows Milvus to distribute entities into different partitions based on the values in a specific scalar field. This feature helps implement partition-oriented multi-tenancy and improves search performance.

This feature will not be discussed on this page. To find more, refer to Use Partition Key.

# List Partitions

When creating a collection, Milvus also creates a partition named _default in the collection. You can list the partitions in a collection as follows.

from pymilvus import MilvusClient

client = MilvusClient(

uri="http://localhost:19530",

token="root:Milvus"

)

res = client.list_partitions(

collection_name="my_collection"

)

print(res)

# Output

#

# ["_default"]

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

# Create Partition

You can add more partitions to the collection and insert entities into these partitions based on certain criteria.

client.create_partition(

collection_name="my_collection",

partition_name="partitionA"

)

res = client.list_partitions(

collection_name="my_collection"

)

print(res)

# Output

#

# ["_default", "partitionA"]

2

3

4

5

6

7

8

9

10

11

12

13

14

# Check for a Specific Partition

The following code snippets demonstrate how to check whether a partition exists in a specific collection.

res = client.has_partition(

collection_name="my_collection",

partition_name="partitionA"

)

print(res)

# Output

#

# True

2

3

4

5

6

7

8

9

10

# Load and Release Partitions

You can separately load or release one or certain partitions.

# Load Partitions

You can separately load specific partitions in a collection. It is worth noting that load status of a collection stays unloaded if there is an unloaded partition in the collection.

client.load_partitions(

collection_name="my_collection",

partition_names=["partitionA"]

)

res = client.get_load_state(

collection_name="my_collection",

partition_name="partitionA"

)

print(res)

# Output

#

# {

# "state": "<LoadState: Loaded>"

# }

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

# Release Partitions

You can also release specific partitions.

client.release_partitions(

collection_name="my_collection",

partition_names=["partitionA"]

)

res = client.get_load_state(

collection_name="my_collection",

partition_name="partitionA"

)

print(res)

# Output

#

# {

# "state": "<LoadState: NotLoaded>"

# }

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

# Data Operations Within Partitions

# Insert and Delete Entities

You can perform insert, upsert, and delete operations in specific operations. For details, refer to

# Search and Query

You can conduct searches and queries within specific partitions. For details, refer to

# Drop Partition

You can drop partitions that are no longer needed. Before dropping a partition, ensure that the partition has been released.

client.release_partitions(

collection_name="my_collection",

partition_names=["partitionA"]

)

client.drop_partition(

collection_name="my_collection",

partition_name="partitionA"

)

res = client.list_partitions(

collection_name="my_collection"

)

print(res)

# ["_default"]

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

# Manage Aliases

In Milvus, an alias is a secondary, mutable name for a collection. Using aliases provides a layer of abstraction that allows you to dynamically switch between collections without modifying your application code. This is particularly useful in production environments for seamless data updates, A/B testing, and other operational tasks.

This page demonstrates how to create, list, reassign, and drop collection aliases.

# Why Use an Alias?

The primary benefit of using an alias is to decouple your client application from a specific, physical collection name.

Imagine you have a live application that queries a collection named prod_data. When you need to update the underlying data, you can perform the update without any service interruption. The workflow would be:

- Create a New Collection: Create a new collection, for instance,

prod_data_v2. - Prepare Data: Load and index the new data in

prod_data_v2. - Switch the Alias: Once the new collection is ready for service, atomically reassign the alias

prod_datafrom the old collection toprod_data_v2.

Your application continues to send requests to the alias prod_data, experiencing zero downtime. This mechanism enables seamless updates and simplifies operations like blue-green deployments for your vector search service.

Key Properties of Aliases:

- A collection can have multiple aliases.

- An alias can only point to one collection at a time.

- When processing a request, Milvus first checks if a collection with the provided name exists. If not, it then checks if the name is an alias for a collection.

# Create Alias

The following code snippet demonstrates how to create an alias for a collection.

from pymilvus import MilvusClient

client = MilvusClient(

uri="http://localhost:19530",

token="root:Milvus"

)

# 9. Manage aliases

# 9.1. Create aliases

client.create_alias(

collection_name="my_collection_1",

alias="bob"

)

client.create_alias(

collection_name="my_collection_1",

alias="alice"

)

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

# List Aliases

The following code snippet demonstrates the procedure to list the aliases allocated to a specific collection.

# 9.2. List aliases

res = client.list_aliases(

collection_name="my_collection_1"

)

print(res)

# Output

#

# {

# "aliases": [

# "bob",

# "alice"

# ],

# "collection_name": "my_collection_1",

# "db_name": "default"

# }

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

# Describe Alias

The following code snippet describes a specific alias in detail, including the name of the collection to which it has been allocated.

# 9.3. Describe aliases

res = client.describe_alias(

alias="bob"

)

print(res)

# Output

#

# {

# "alias": "bob",

# "collection_name": "my_collection_1",

# "db_name": "default"

# }

2

3

4

5

6

7

8

9

10

11

12

13

14

# Alter Alias

You can reallocate the alias already allocated to a specific collection to another.

# 9.4 Reassign aliases to other collections

client.alter_alias(

collection_name="my_collection_2",

alias="alice"

)

res = client.list_aliases(

collection_name="my_collection_2"

)

print(res)

# Output

#

# {

# "aliases": [

# "alice"

# ],

# "collection_name": "my_collection_2",

# "db_name": "default"

# }

res = client.list_aliases(

collection_name="my_collection_1"

)

print(res)

# Output

#

# {

# "aliases": [

# "bob"

# ],

# "collection_name": "my_collection_1",

# "db_name": "default"

# }

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

# Drop Alias

The following code snippet demonstrates the procedure to drop an alias.

# 9.5 Drop aliases

client.drop_alias(

alias="bob"

)

client.drop_alias(

alias="alice"

)

2

3

4

5

6

7

8

# Drop Collection

You can drop a collection if it is no longer needed.

# Examples

The following code snippets assume that you have a collection named my_collection.

from pymilvus import MilvusClient

client = MilvusClient(

uri="http://localhost:19530",

token="root:Milvus"

)

client.drop_collection(

collection_name="my_collection"

)

2

3

4

5

6

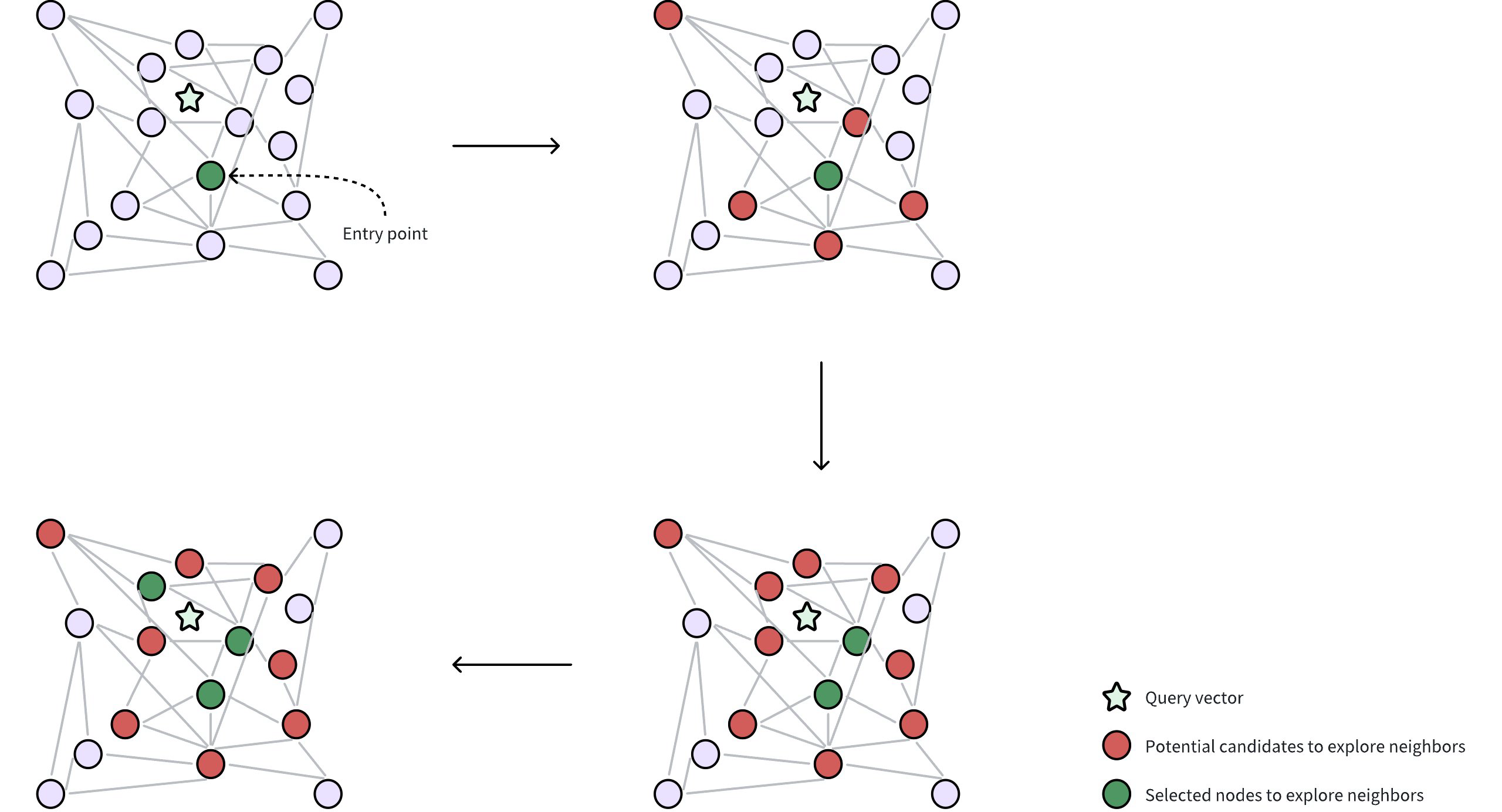

7

8

9

10

# Schema & Data Fields

# Schema Explained

A schema defines the data structure of a collection. Before creating a collection, you need to work out a design of its schema. This page helps you understand the collection schema and design an example schema on your own.

# Overview

On Zilliz Cloud, a collection schema assembles a table in a relational database, which defines how Zilliz Cloud organizes data in the collection.

A well-designed schema is essential as it abstracts the data model and decides if you can achieve the business objectives through a search. Furthermore, since every row of data inserted into the collection must follow the schema, it helps maintain data consistency and long-term quality. From a technical perspective, a well-defined schema leads to well-organized column data storage and a cleaner index structure, boosting search performance.

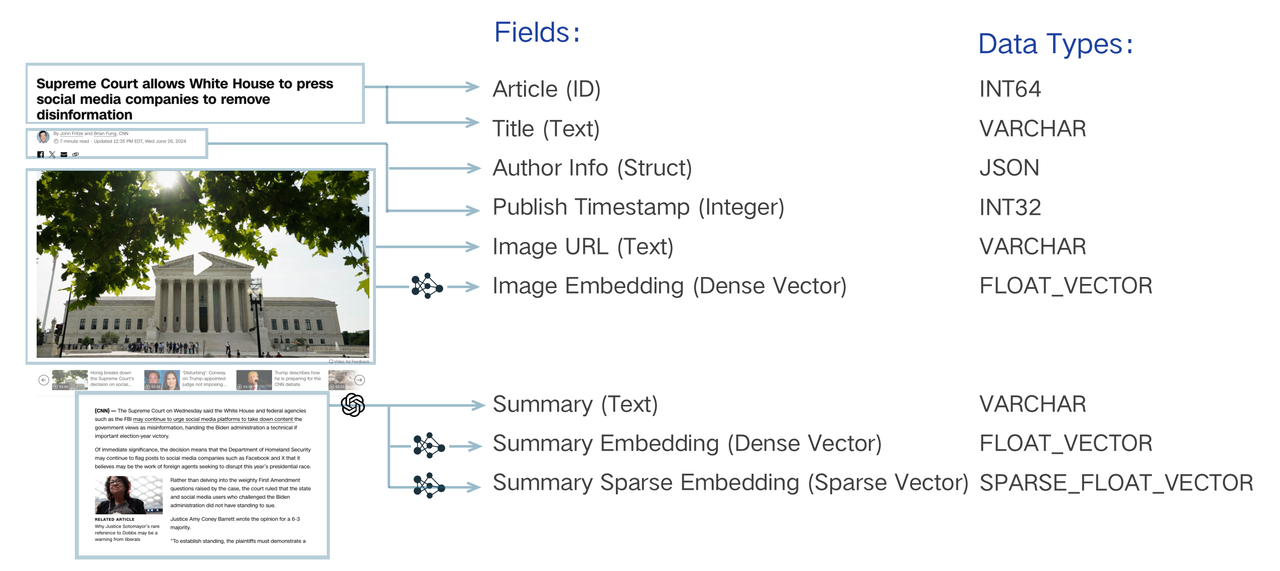

A collection schema has a primary key, a maximum of four vector fields, and serveral scalar fields. The following diagram illustrates how to map an article to a list of schema fields.

The data model design of a search system involves analyzing business needs and abstracting information into a schema-expressed data model. For instance, searching a piece of text must be "indexed" by converting the literal string into a vector through "embedding" and enabling vector search. Beyond this essential requirement, storing other properties such as publication timestamp and author may be necessary. This metadata allows for semantic searches to be refined through filtering, returning only texts published after a specific date or by a particular author. You can also retrieve these scalars with the main text to render the search result in the application. Each should be assigned a unique identifier to organize these text pieces, expressed as an integer or string. These elements are essential for achieving sophisticated search logic.

Refer to Schema Design Hands-On to figure out how to make a well-designed schema.

# Create Schema

The following code snippet demonstrates how to create a schema.

from pymilvus import MilvusClient, DataType

schema = MilvusClient.create_schema()

2

3

# Add Primary Field

The primary field in a collection uniquely identifies an entity. It only accepts Int64 or VarChar values. The following code snippets demonstrate how to add the primary field.

schema.add_field(

field_name="my_id",

"datatype=DataType.INT64",

is_primary=True,

auto_id=False,

)

2

3

4

5

6

When adding a field, you can explicitly clarify the field as the primary field by setting its is_primary property to True. A primary field accepts Int64 values by default. In this case, the primary field value should be integers similar to 12345. If you choose to use VarChar values in the primary field, the value should be strings similar to my_entity_1234.

You can also set the autoId properties to True to make Zilliz Cloud automatically allocate primary field values upon data insertions.

For details, refer to Primary Field & AutoId.

# Add Vector Fields

Vector fields accept various sparse and dense vector embeddings. On Zilliz Cloud, you can add four vector fields to a collection. The following code snippets demonstrate how to add a vector field.

schema.add_field(

field_name="my_vector",

datatype=DataType.FLOAT_VECTOR,

dim=5

)

2

3

4

5

The dim parameter in the above code snippets indicates the dimensionality of the vector embeddings to be held in the vector field. The FLOAT_VECTOR value indicates that the vector field holds a list of 32-bit floating numbers, which are usually used to represent antilogarithms. In addition to that, Zilliz Cloud also supports the following types of vector embeddings:

FLOAT16_VECTOR: A vector field of this type holds a list of 16-bit half-precision floating numbers and usually applies to memory-or-bandwidth-restricted deep learning or GPU-based computing scenarios.BFLOAT16_VECTOR: A vector field of this type holds a list of 16-bit floating-point numbers that have reduced precision but the same exponent range as Float32. This type of data is commonly used in deep learning scenarios, as it reduces memory usage without significantly impacting accuracy.INT8_VECTOR: A vector field of this type stores vectors composed of 8-bit signed integers (int8), with each component ranging from -128 to 127. Tailored or quantized deep learning architectures -- such as ResNet and EfficientNet -- it substantially shrinks model size and boosts inference speed, all while incurring only minimal precision loss. Note: This vector type is supported only for HNSW indexes.BINARY_VECTOR: A vector field of this type holds a list of 0s and 1s. They serve as compact features for representing data in image processing and information retrieval scenarios.SPARSE_FLOAT_VECTOR: A vector field of this type holds a list of non-zero numbers and their sequence numbers to represent sparse vector embeddings.

# Add Scalar Fields

In common cases, you can use scalar fields to store the metadata of the vector embeddings stored in Milvus, and conduct ANN searches with metadata filtering to improve the correctness of the search results. Zilliz Cloud supports multiple scalar field types, including VarChar, Boolean, Int, Float, Double, Array, and JSON.

# Add String Fields

In Milvus, you can use VarChar fields to store strings. For more on the VarChar field, refer to String Field.

schema.add_field(

field_name="my_varchar",

datatype=DataType.VARCHAR,

max_length=512

)

2

3

4

5

# Add Number Fields

The types of numbers that Milvus supports are Int8, Int16, Int32, Int64, Float, and Double. For more on the number fields, refer to Number Field.

schema.add_field(

field_name="my_int64",

datatype=DataType.INT64

)

2

3

4

# Add Boolean Fields

Milvus supports boolean fields. The following code snippets demonstrate how to add a boolean field.

schema.add_field(

field_name="my_bool",

datatype=DataType.BOOL,

)

2

3

4

# Add JSON fields

A JSON field usually stores half-structured JSON data. For more on the JSON fields, refer to JSON Field.

schema.add_field(

field_name="my_json",

datatype=DataType.JSON,

)

2

3

4

# Add Array Fields

An array field stores a list of elements. The data types of all elements in an array field should be the same. For more on the array fields, refer to Array Field.

schema.add_field(

field_name="my_array",

datatype=DataType.ARRAY,

element_type=DataType.VARCHAR,

max_capacity=5,

max_length=512,

)

2

3

4

5

6

7

# Primary Field & AutoID

The primary field uniquely identifies an entity. This page introduces how to add the primary field of two different data types and how to enable Milvus to automatically allocate primary field values.

# Overview

In a collection, the primary key of each entity should be globally unique. When adding the primary field, you need to explicitly set its data type to VARCHAR or INT64. Setting its data type to INT64 indicates that the primary keys should be an integer similar to 12345; Setting its data type to VARCHAR indicates that the primary keys should be a string similar to my_entity_1234.

You can also enable AutoID to make Milvus automatically allocate primary keys for incoming entities. Once you have enabled AutoID in your collection, do not include primary keys when inserting entities.

The primary field in a collection does not have a default value and cannot be null.

NOTE

- A standard

insertoperation with a primary key that already exists in the collection will not overwrite the old entry. Instead, it will create a new, separate entity with the same primary key. This can lead to unexpected search results and data redundancy. - If your use case involves updating existing data or you suspect that the data you are inserting may already exist, it is highly recommended to use the upsert operation. The upsert operation will intelligently update the entity if the primary key exists, or insert a new one if it does not. For more details, refer to Upsert Entities.

# Use Int64 Primary Keys

To use primary keys of the Int64 type, you need to set datatype to DataType.INT64 and set is_primary to true. If you also need Milvus to allocate the primary keys for the incoming entities, also set auto_id to true.

from pymilvus import MilvusClient, DataType

schema = MilvusClient.create_schema()

schema.add_field(

field_name="my_id",

datatype=DataType.INT64,

is_primary=True,

auto_id=True,

)

2

3

4

5

6

7

8

9

10

# Use VarChar Primary Keys

To use VarChar primary keys, in addition to changing the value of the data_type parameter to DataType.VARCHAR, you also need to set the max_length parameter for the field.

schema.add_field(

field_name="my_id",

datatype=DataType.VARCHAR,

is_primary=True,

auto_id=True,

max_length=512,

)

2

3

4

5

6

7

# Dense Vector

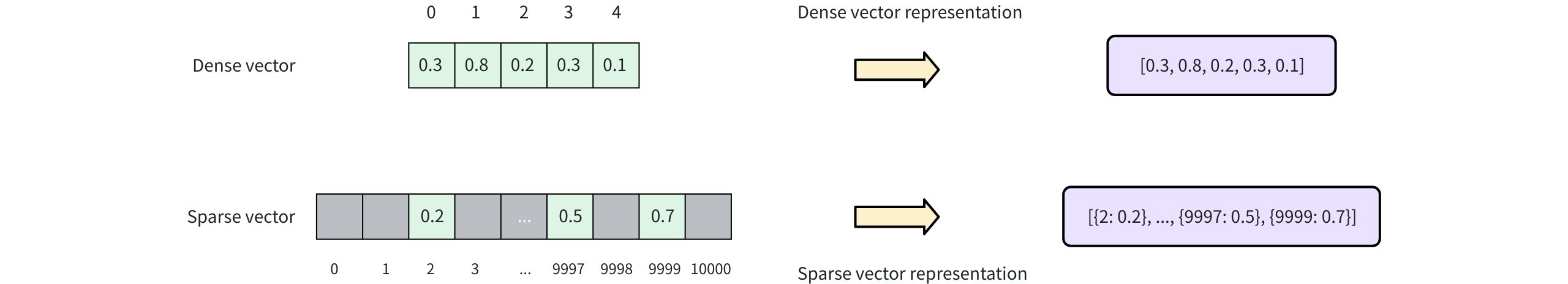

Dense vectors are numerical data representations widely used in machine learning and data analysis. The consist of arrays with real numbers, where most or all elements are non-zero. Compared to sparse vectors, dense vectors contain more information at the same dimensional level, as each dimonsion holds meaningful values. This representation can effectively capture complex patterns and relationships, making data easier to analyze and process in high-dimensional spaces. Dense vectors typically have a fixed number of dimensions, ranging from a few dozen to several hundred or even thousands, depending on the specific application and requirements.

Dense vectors are mainly used in scenarios that require understanding the semantics of data, such as semantic search and recommendation systems. In semantic search, dense vectors help capture the underlying connections between queries and documents, improving the relevance of search results. In recommendation systems, they aid in identifying similarities between users and items, offering more personalized suggestions.

# Overview

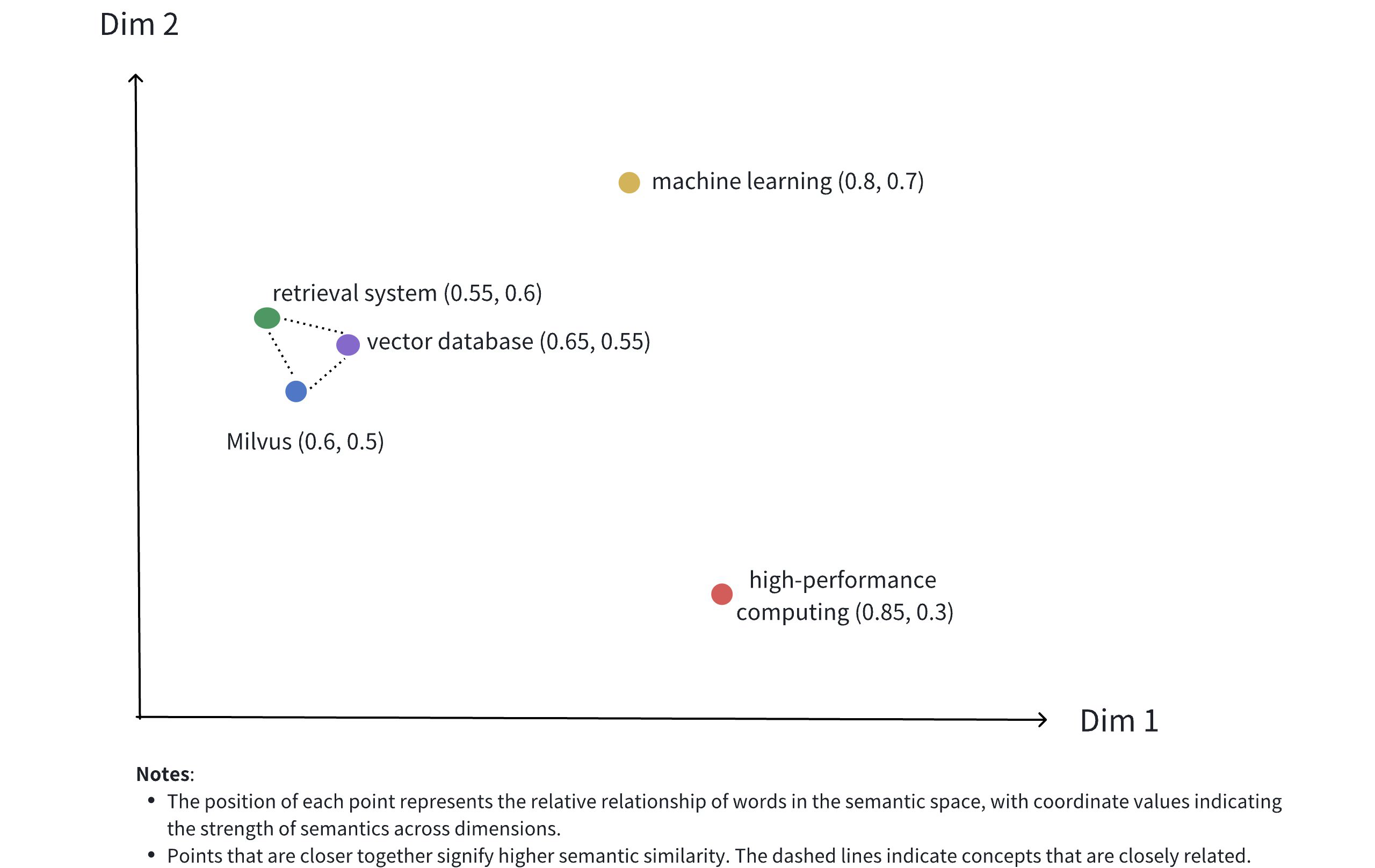

Dense vectors are typically represented as arrays of floating-point numbers with a fixed length, such as [0.2, 0.7, 0.1, 0.8, 0.3, ..., 0.5]. The dimensionality of these vectors usually ranges from hundreds to thousands, such as 128,256,768, or 1024. Each dimension captures specific semantic features of an object, making it applicable to various scenarios through similarity calculations.

The image above illustrates the representation of dense vectors in a 2D space. Although dense vectors in real-world applications often have much higher dimensions, this 2D illustration effectively conveys serveral concepts:

- Multidimensional Representation: Each point represents a conceptual object (like Milvus, vector database, retrieval system, etc.), with its position determined by the values of its dimensions.

- Semantic Relationships: The distances between points reflect the semantic similarity between concepts. Closer points indicate concepts that are more semantically related.

- Clustering Effect: Related concepts (such as Milvus, vector database, and retrieval system) are positioned close to each other in space, forming a semantic cluster.

Below is an example of a real dense vector representing the text "Milvus is an efficient vector database":

[

-0.013052909,

0.020387933,

-0.007869,

-0.11111383,

-0.030188112,

-0.0053388323,

0.0010654867,

0.072027855,

# ... more dimensions

]

2

3

4

5

6

7

8

9

10

11

Dense vectors can be generated using various embedding models, such as CNN models (like ResNet, VGG) for images and language models (like BERT, Word2Vec) for text. These models transform raw data into points in high-dimensional space, capturing the semantic features of the data. Additionally, Milvus offers convenient methods to help users generate and process dense vectors, as detailed in Embeddings.

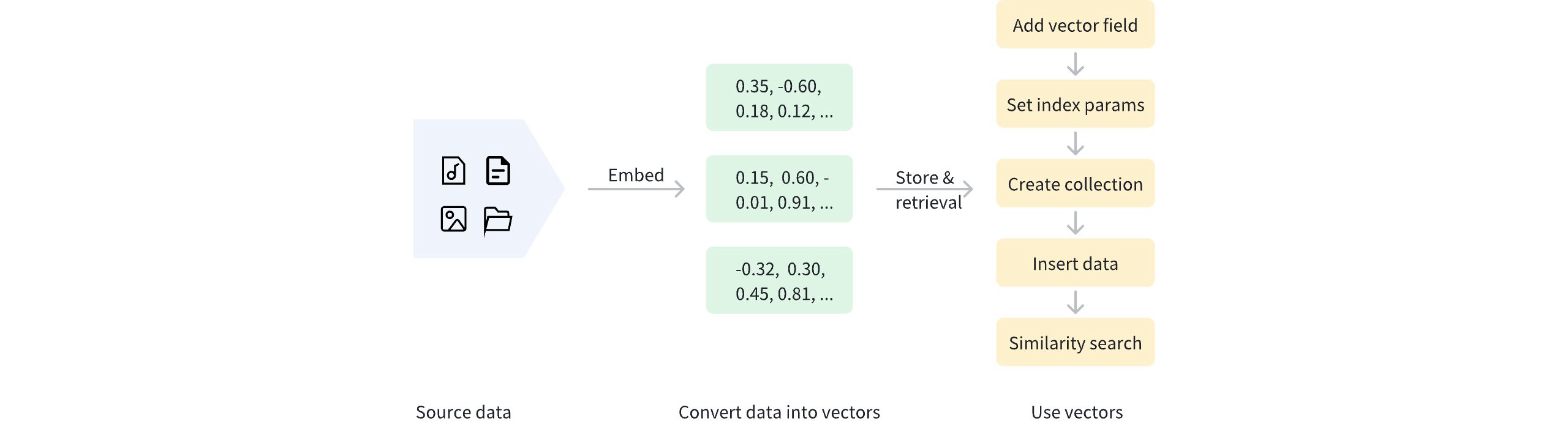

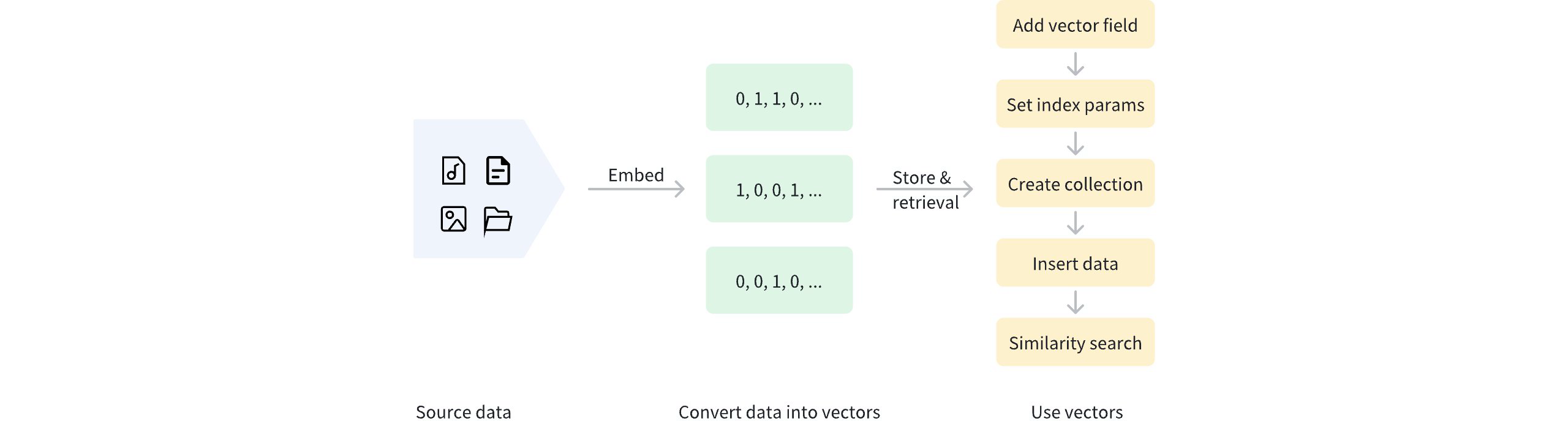

Once data is vectorized, it can be stored in Milvus for management and vector retrieval. The diagram below shows the basic process.

NOTE

Besides dense vectors, Milvus also supports sparse vectors and binary vectors. Sparse vectors are suitable for precise matches based on specific terms, such as keyword search and term matching, while binary vectors are commonly used for efficiently handling binarized data, such as image pattern matching and certain hashing applications. For more information, refer to Binary Vector and Sparse Vector.

# Use dense vectors

# Add vector field

To use dense vectors in Milvus, first define a vector field for storing dense vectors when creating a collection. This process includes:

- Setting

datatypeto a supported dense vector data type. For supported dense vector data types, see Data Types. - Specifying the dimensions of dense vector using the

dimparameter.

In the example below, we add a vector field named dense_vector to store dense vectors. The field's data type is FLOAT_VECTOR, with a demension of 4.

from pymilvus import MilvusClient, DataType

client = MilvusClient(uri="http://localhost:19530")

schema = client.create_schema(

auto_id=True,

enable_dynamic_fields=True,

)

schema.add_field(field_name="pk", datatype=DataType.VARCHAR, is_primary=True, max_length=100)

schema.add_field(field_name="dense_vector", datatype=DataType.FLOAT_VECTOR, dim=4)

2

3

4

5

6

7

8

9

10

11

Supported data types for dense vector fields:

| Data Type | Description |

|---|---|

FLOAT_VECTOR | Stores 32-bit floating-point numbers, commonly used for representing real numbers in scientific computations and machine learning. Ideal for scenarios requiring high precision, such as distinguishing similar vectors. |

FLOAT16_VECTOR | Stores 16-bit half-precision floating-point numbers, used for deep learning and GPU computations. It saves storage space in scenarios where precision is less critical, such as in the low-precision recall phase of recommendation systems. |

BFLOAT_16_VECTOR | Stores 16-bit Brain Floating Point (bfloat16) numbers, offering the same range of exponents as Float32 but with reduced precision. Suitable for scenarios that need to process large volumes of vectors quickly, such as large-scale image retrieval. |

INT8_VECTOR | Stores vectors whose individual elements in each dimension are 8-bit integers (int8), with each element ranging from -128 to 127. Designed for quantized deep learning models (e.g., ResNet, EfficientNet), INT8_VECTOR reduces model size and speeds up inference with minimal precision loss. Note: This vector type is supported only for HNSW indexes. |

# Set index params for vector field

To accelerate semantic searches, an index must be created for the vector field. Indexing can significantly improve the retrieval efficiency of large-scale data.

index_params = client.prepare_index_params()

index_params.add_index(

field_name="dense_vector",

index_name="dense_vector_index",

index_type="AUTOINDEX",

metric_type="IP"

)

2

3

4

5

6

7

8

In the example above, an index named dense_vector_index is created for the dense_vector field using the AUTOINDEX index type. The metric_type is set to IP, indicating that inner product will be used as the distance metric.

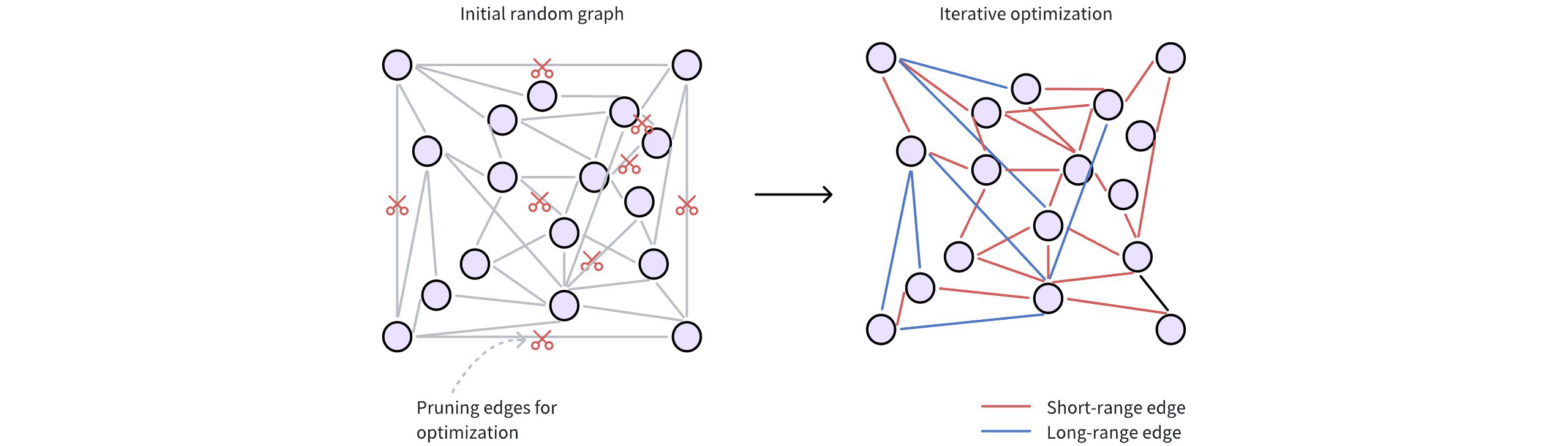

Milvus provides various index types for a better vector search experience. AUTOINDEX is a special index type designed to smooth the learning curve of vector search. There are a lot of index types available for you to choose from.

Milvus supports other metric types. For more information, refer to Metric Types.

# Create collection

Once the dense vector and index param settings are complete, you can create a collection containing dense vectors. The example below uses the create_collection method to create a collection named my_collection.

client.create_collection(

collection_name="my_collection",

schema=schema,

index_params=index_params

)

2

3

4

5

# Insert data

After creating the collection, use the insert method to add data containing dense vectors. Ensure that the dimensionality of the dense vectors being inserted matches the dim value defined when adding the dense vector field.

data = [

{"dense_vector": [0.1, 0.2, 0.3, 0.7]},

{"dense_vector": [0.2, 0.3, 0.4, 0.8]},

]

client.insert(

collection_name="my_collection",

data=data

)

2

3

4

5

6

7

8

9

# Perform similarity search

Semantic search based on dense vectors is one of the core features of Milvus, allowing you to quickly find data that is most similar to a query vector based on the distance between vectors. To perform a similarity search, prepare the query vector and search parameters, then call the search method.

search_params = {

"params": {"nprobe": 10}

}

query_vector = [0.1, 0.2, 0.3, 0.7]

res = client.search(

collection_name="my_collection",

data=[query_vector],

anns_field="dense_vector",

search_params=search_params,

limit=5,

output_field=["pk"]

)

print(res)

# Output

# data: [

# "[

# {

# 'id': '453718927992172271',

# 'distance': 0.7599999904632568,

# 'entity': {'pk': '453718927992172271'}

# },

# {

# 'id': '453718927992172270',

# 'distance': 0.6299999952316284,

# 'entity': {'pk': '453718927992172270'}

# }

# ]"

# ]

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

For more information on similarity search parameters, refer to Basic ANN Search.

# Binary Vector

Binary vectors are a special form of data representation that convert traditional high-dimensional floating-point vectors into binary vectors containing only 0s and 1s. This transformation not only compresses the size of the vector but also reduces storage and computational costs while retaining semantic information. When precision for non-critical features is not essential, binary vectors can effectively maintain most of the integrity and utility of the original floating-point vectors.

Binary vectors have a wide range of applications, particularly in situations where computational efficiency and storage optimization are crucial. In large-scale AI systems, such as search engines or recommendation systems, real-time processing of massive amounts of data is key. By reducing the size of the vectors, binary vectors help lower latency and computational costs without significantly sacrificing accuracy. Additional, binary vectors are usefual in resource constrained environments, such as mobile devices and embedded systems, where memory and processing power are limited. Through the use of binary vectors, complex AI functions can be implemented in these restricted settings while maintaining high performance.

# Overview

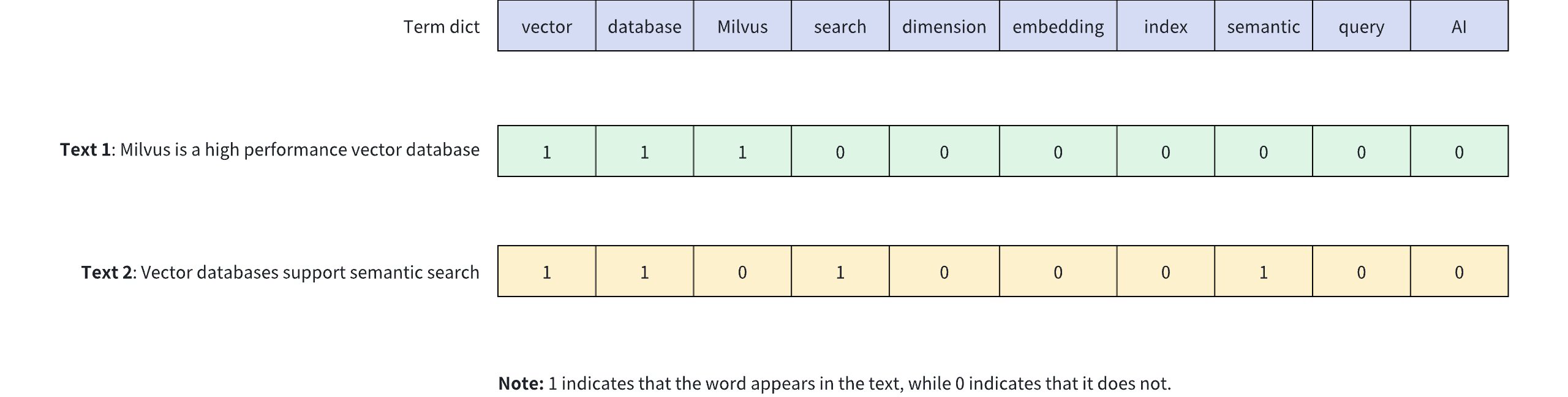

Binary vectors are a method of encoding complex objects (like images, text, or audio) into fixed-length binary values. In Milvus, binary vectors are typically represented as bit arrays or byte arrays. For example, an 8-dimensional binary vector can be represented as [1, 0, 1, 1, 0, 0, 1, 0].

The diagram below shows how binary vectors represent the presence of keywords in text content. In this example, a 10-dimensional binary vector is used to represent two different texts (Text 1 and Text 2), where each dimension corresponds to a word in the vocabulary: 1 indicates the presence of the word in the text, while 0 indicates its absence.

Binary vectors have the following characteristics:

- Efficient Storage: Each dimension requires only 1 bit of storage, significantly reducing storage space.

- Fast Computation: Similarity between vectors can be quickly calculated using bitwise operations like XOR.

- Fixed Length: The length of the vector remains constant regardless of the original text length, making indexing and retrieval easier.

- Simple and Intuitive: Directly reflects the presence of keywords, making it suitable for certain specialized retrieval tasks.

Binary vectors can be generated through various methods. In text processing, predefined vocabularies can be used to set corresponding bits based on word presence. For image processing, perceptual hashing algorithms (like pHash) can generate binary features of images. In machine learning applications, model outputs can be binarized to obtain binary vector representations.

After binary vectorization, the data can be stored in Milvus for management and vector retrieval. The diagram below shows the basic process.

NOTE

Although binary vectors excel in specific scenarios, they have limitations in their expressive capability, making it difficult to capture complex semantic relationships. Therefore, in real-world scenarios, binary vectors are often used alongside other vector types to balance efficiency and expressiveness. For more information, refer to Dense Vector and Sparse Vector.

# Use binary vectors

# Add vector field

To use binary vectors in Milvus, first define a vector field for storing binary vectors when creating a collection. This process includes:

- Setting

datatypeto the supported binary vector data type, i.e.,BINARY_VECTOR. - Specifying the vector's dimensions using the

dimparameter. Note thatdimmust be a multiple of 8 as binary vectors must be converted into a byte array when inserting. Every 8 boolean values (0 or 1) will be packed into 1 byte. For example, ifdim=128, a 16-byte arra is required for insertion.

from pymilvus import MilvusClient, DataType

client = MilvusClient(uri="http://localhost:19530")

schema = client.create_schema(

auto_id=True,

enable_dynamic_fields=True,

)

schema.add_field(field_name="pk", datatype=DataType.VARCHAR, is_primary=True, max_length=100)

schema.add_field(field_name="binary_vector", datatype=DataType.BINARY_VECTOR, dim=128)

2

3

4

5

6

7

8

9

10

11

In this example, a vector field named binary_vector is added for storing binary vectors. The data type of this field is BINARY_VECTOR, with a dimension of 128.

# Set index params for vector field

To Speed up searches, an index must be created for the binary vector field. Indexing can significantly enhance the retrieval efficiency of large-scale vector data.

index_params = client.prepare_index_params()

index_params.add_index(

field_name="binary_vector",

index_name="binary_vector_index",

index_type="AUTOINDEX",

metric_type="HAMMING"

)

2

3

4

5

6

7

8

In the example above, an index named binary_vector_index is created for the binary_vector field, using the AUTOINDEX index type. The metric_type is set to HAMMING, indicating that Hamming distance is used for similarity measurement.

Milvus provides various index types for a better vector search experience. AUTOINDEX is a special index type designed to smooth the learning curve of vector search. There are a lot of index types available for you to choose from. For details, refer to Index Explained.

Additionally, Milvus supports other similarity metrics for binary vectors. For more information, refer to Metric Types.

# Create collection

Once the binary vector and index settings are complete, create a collection that contains binary vectors. The example below uses the create_collection method to create a collection named my_collection.

client.create_collection(

collection_name="my_collection",

schema=schema,

index_params=index_params

)

2

3

4

5

# Insert data

After creating the collection, use the insert method to add data containing binary vectors. Note that binary vectors should be provided in the form of a byte array, where each byte represents 8 boolean values.

For example, for a 128-dimensional binary vector, a 16-byte array is required (since 128 bits ÷ 8 bits/byte = 16 bytes). Below is an example code for inserting data:

def convert_bool_list_to_bytes(bool_list):

if len(bool_list) % 8 != 0:

raise ValueError("The length of a boolean list must be a multiple of 8")

byte_array = bytearray(len(bool_list) // 8)

for i, bit in enumerate(bool_list):

if bit == 1:

index = i // 8

shift = i % 8

byte_array[index] |= (1 << shift)

return bytes(byte_array)

bool_vectors = [

[1, 0, 0, 1, 1, 0, 1, 1, 0, 1, 0, 1, 0, 1, 0, 0] + [0] * 112,

[0, 1, 0, 1, 0, 1, 0, 0, 1, 1, 0, 0, 1, 1, 0, 1] + [0] * 112,

]

data = [{"binary_vector": convert_bool_list_to_bytes(bool_vector) for bool_vector in bool_vectors}]

client.insert(

collection_name="my_collection",

data=data

)

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

# Perform similarity search

Similarity search is one of the core features of Milvus, allowing you to quickly find data that is most similar to a query vector based on the distance between vectors. To perform a similarity search using binary vectors, prepare the query vector and search parameters, the call the search method.

During search operations, binary vectors must also be provided in the form of a byte array. Ensure that the dimensionality of the query vector matches the dimension specified when defining dim and that every 8 boolean values are converted into 1 byte.

search_params = {

"params": {"nprobe": 10}

}

query_bool_list = [1, 0, 0, 1, 1, 0, 1, 1, 0, 1, 0, 1, 0, 1, 0, 0] + [0] * 112

query_vector = convert_bool_list_to_bytes(query_bool_list)

res = client.search(

collection_name="my_collection",

data=[query_vector],

anns_field="binary_vector",

search_params=search_params,

limit=5,

output_fields=["pk"]

)

print(res)

# Output

# data: ["[{'id': '453718927992172268', 'distance': 10.0, 'entity': {'pk': '453718927992172268'}}]"]

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

For more information on similarity search parameters, refer to Basic ANN Search.

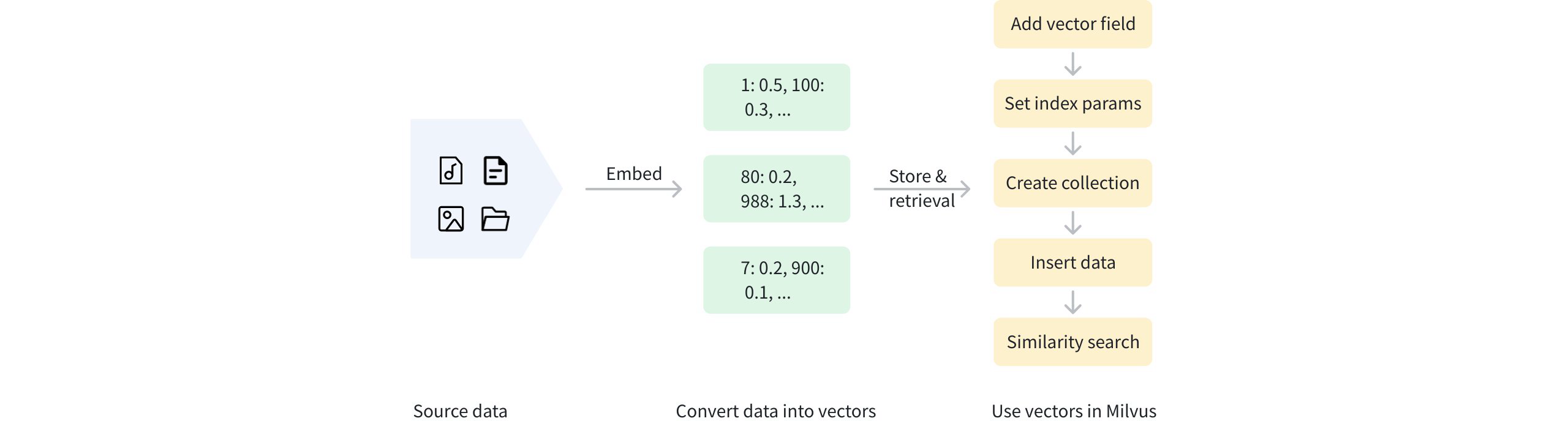

# Sparse Vector

Sparse vectors are an important method of capturing surface-level term matching in information retrieval and natural language processing. While dense vectors excel in semantic understanding, sparse vectors often provide more predictable matching results, especially when searching for special terms or textual identifiers.

# Overview

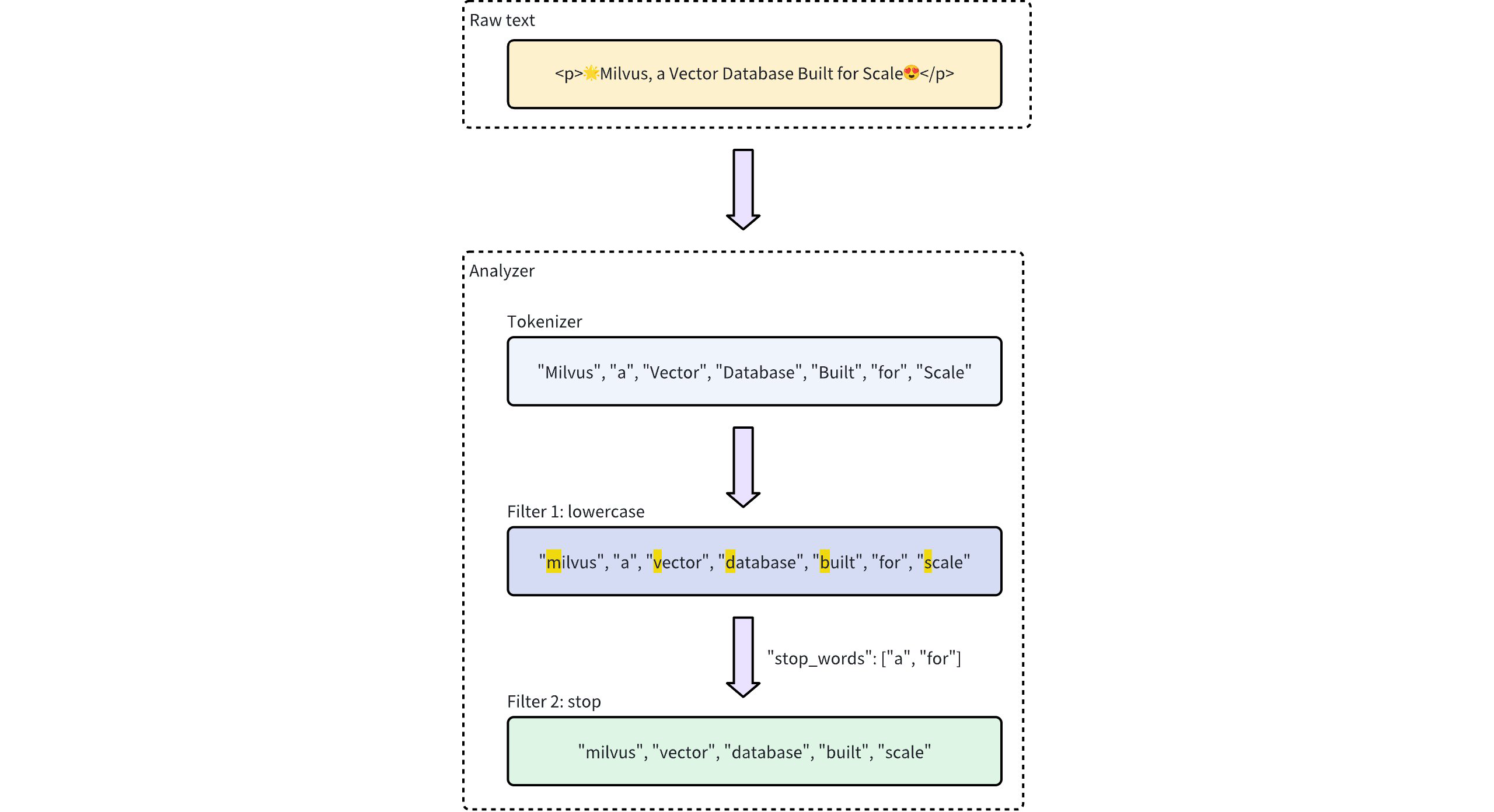

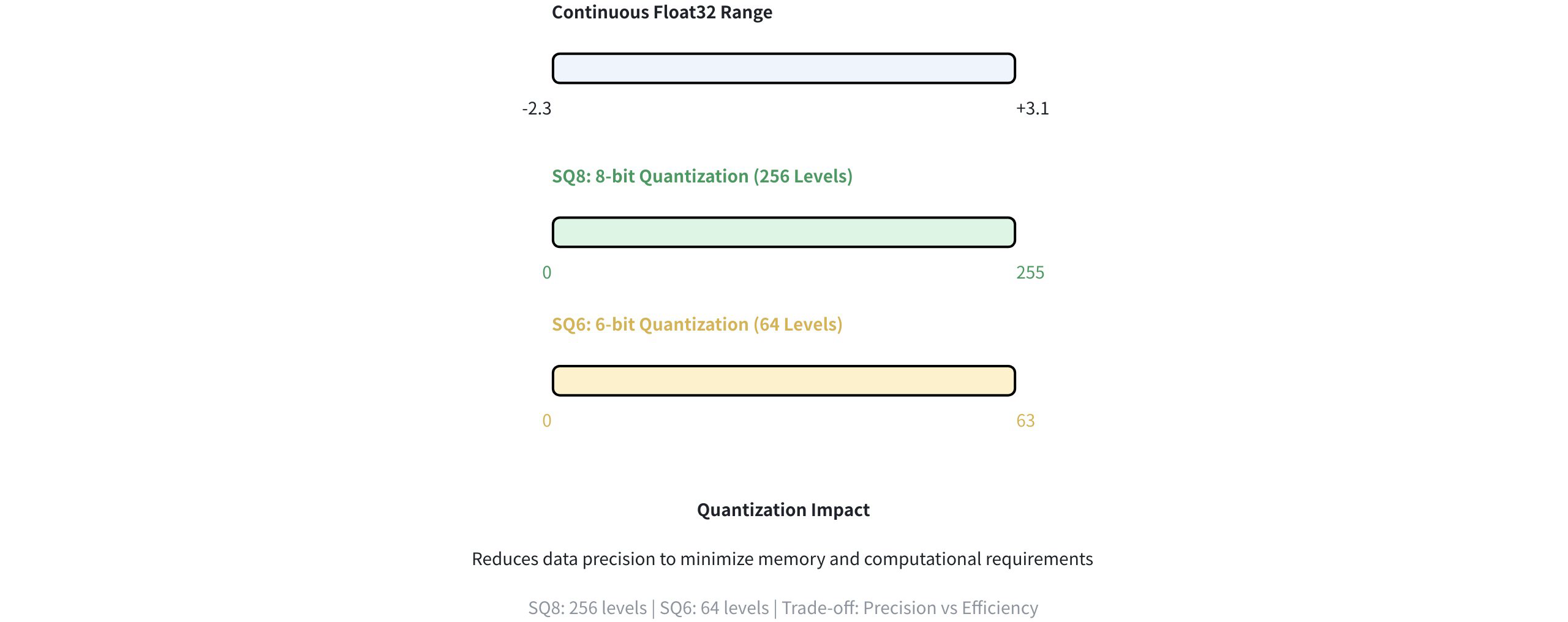

A sparse vector is a special high-dimensional vector where most elements are zero, and only a few dimensions have non-zero values. As shown in the diagram below, dense vectors are typically represented as continuous arrays where each position has a value (e.g., [0.3, 0.8, 0.2, 0.3, 0.1]). In contrast, sparse vectors store only non-zero elements and their indices of the dimension, often represented as key-value pairs of { index: value } (e.g., [{2: 0.2}, ..., {9997: 0.5}, {9999: 0.7}]).

With tokenization and scoring, documents can be represented as bag-of-words vectors, where each dimension corresponds to a specific word in the vocabulary. Only the words present in the document have non-zero values, creating a sparse vector representation. Sparse vectors can be generated using two approaches:

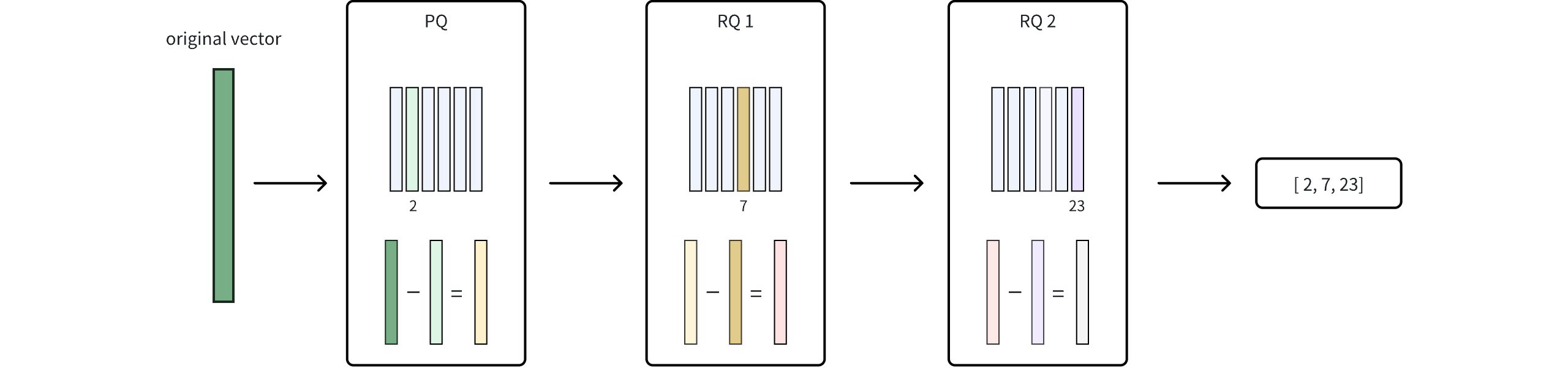

- Traditional statistical techniques: such as TF-IDF(Term Frequency-Inverse Document Frequency) and BM25(Best Matching 25), assign weights to words based on their frequency and importance across a corpus. These methods compute simple statistics as scores for each dimension, which represents a token. Milvus provides built-in full-text search with the BM25 method, which automatically converts text into sparse vectors, eliminating the need for manual preprocessing. This approach is ideal for keyword-based search, where precision and exact matches are important. Refer to Full Text Search for more information.

- Neural sparse embedding models: are learned methods to generate sparse representations by training on large datasets. They are typically deep learning models with Transformer architecture, able to expand and weigh terms based on semantic context. Milvus also supports externally generated sparse embeddings from models like SPLADE. See Embeddings for details.

Sparse vectors and the original text can be stored in Milvus for efficient retrieval. The diagram below outlines the overall process.

NOTE

In addition to sparse vectors, Milvus also supports dense vectors and binary vectors. Dense vectors are ideal for capturing deep semantic relationships, while binary vectors excel in scenarios like quick similarity comparisions and content deduplication. For more information, refer to Dense Vector and Binary Vector.

# Data Formats

In the following sections, we demonstrate how to store vectors from learned sparse embedding models like SPLADE. If you are looking form something to complement dense-vector-based semantic search, we recommend Full Text Search with BM25 over SPLADE for simplicity. If you're ran quality evaluation and dediced to use SPLADE, you can refer to Embeddings on how to generate sparse vectors with SPLADE.

Milvus supports sparse vector input with the following formats:

- List of Dictionaries (formatted as

{dimension_index: value, ...})

# Represent each sparse vector using a dictionary

sparse_vectors = [{27: 0.5, 100: 0.3, 5369: 0.6} , {100: 0.1, 3: 0.8}]

2

- Sparse Matrix (using the

scipy.sparseclass)

from scipy.sparse import csr_matrix

# First vector: indices [27, 100, 5369] with values [0.5, 0.3, 0.6]

# Second vector: indices [3, 100] with values [0.8, 0.1]

indices = [[27, 100, 5369], [3, 100]]

values = [[0.5, 0.3, 0.6], [0.8, 0.1]]

sparse_vectors = [csr_matrix((vals, ([0]*len(idx), idx)), shape=(1, 5369+1)) for idx, vals in zip(indices, values)]

2

3

4

5

6

7

- List of Tuple Iterables (e.g.

[(dimension_index, value)])

# Represent each sparse vector using a list of iterables (e.g. tuples)

sparse_vector = [

[(27, 0.5), (100, 0.3), (5369, 0.6)],

[(100, 0.1), (3, 0.8)]

]

2

3

4

5

# Define Collection Schema