Milvus Concepts

# Architecture

# Milvus Architecture Overview

Milvus is an open-source, cloud-native vector database designed for high-performance similarity search on massive vector datasets. Built on top of popular vector search libraries including Faiss, HNSW, DiskANN, and SCANN, it powers AI applications and unstructured data retrieval scenarios. Before proceeding, familiarize yourself with the basic principles of embedding retrieval.

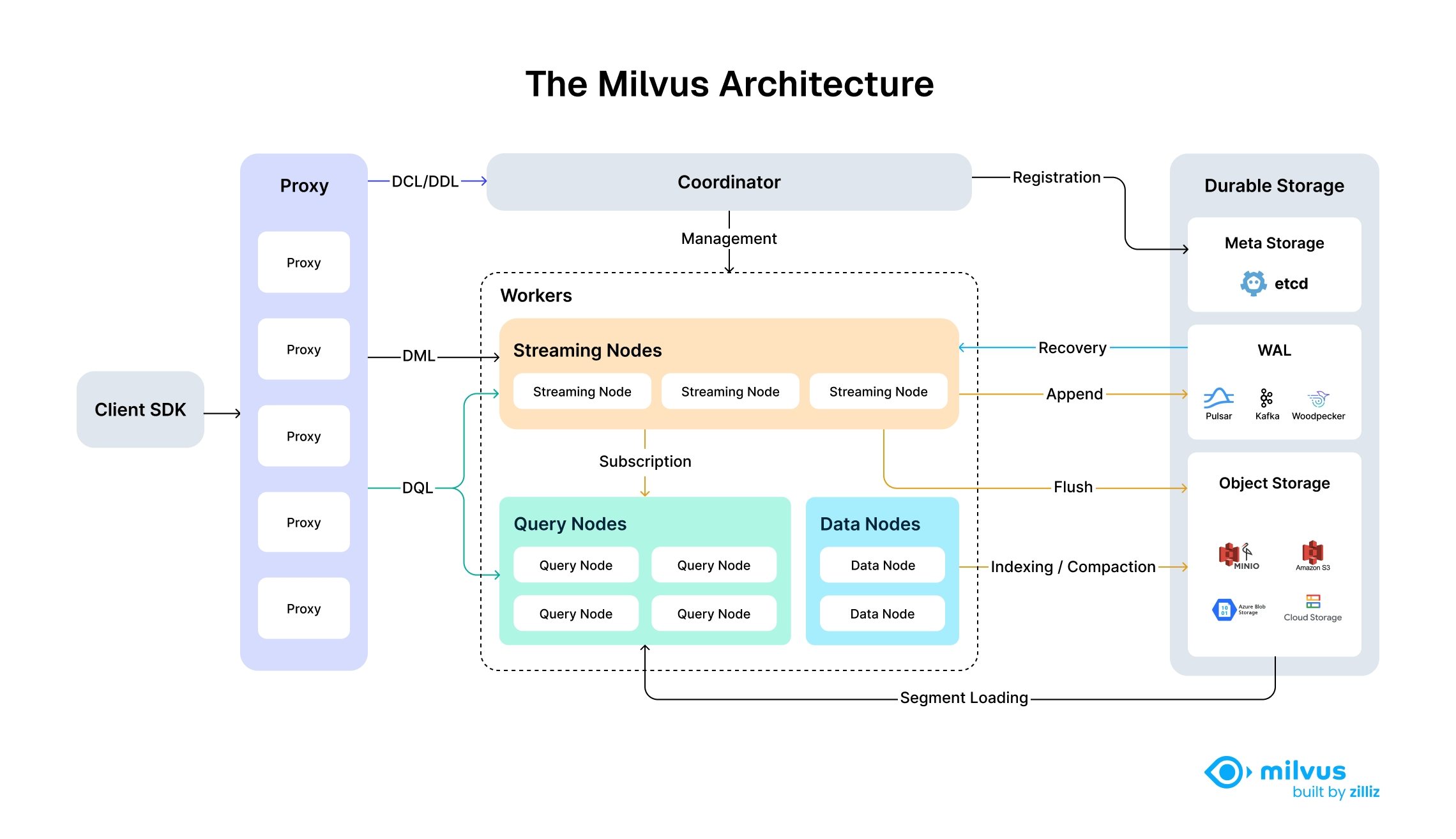

# Architecture Diagram

The following diagram illustrates Milvus's high-level architecture, showcasing its modular, scalable, and cloud-native design with fully disaggregated storage and compute layers.

# Architectural Principles

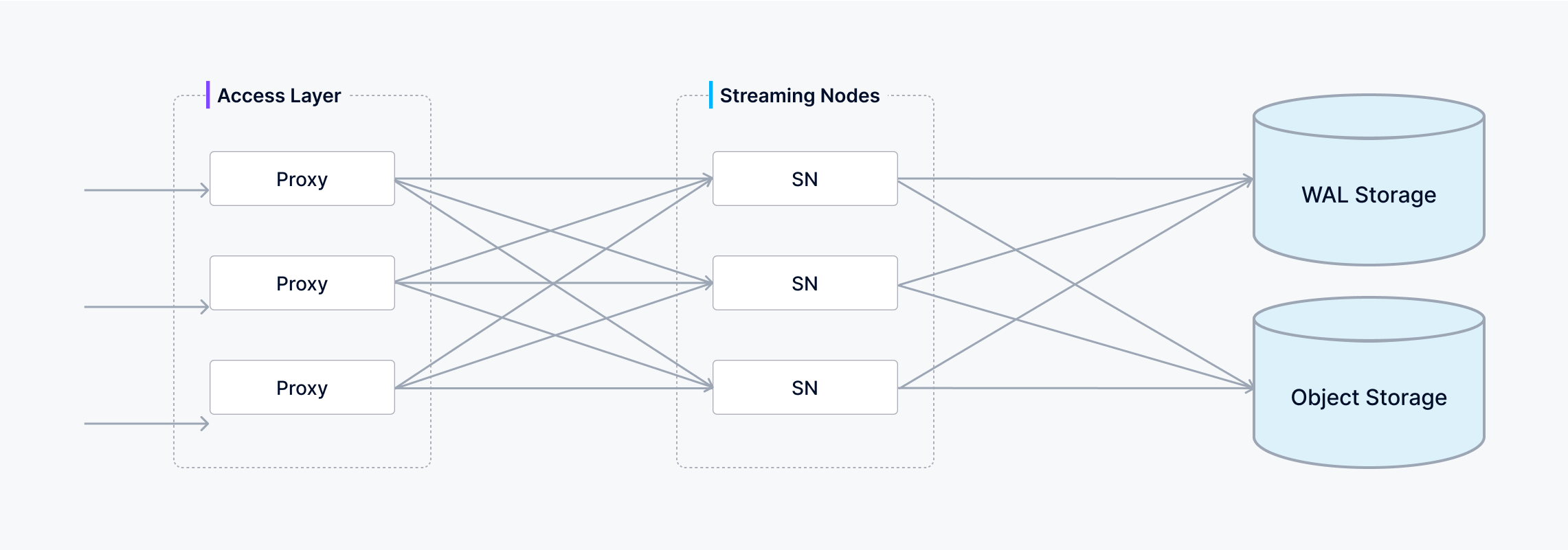

Milvus follows the principle of data plane and control plane disaggregation, comprising four main layers that are mutually independent in terms of scalability and disaster recovery. This shared-storage architecture, with fully disaggregated storage and compute layers, enables horizontal scaling of compute nodes and uses Woodpecker as a zero-disk WAL layer for increased elasticity and reduced operational overhead.

By separating stream processing into the Streaming Node and batch processing into the Query Node and Data Node, Milvus achieves high performance while still meeting real-time processing requirements.

# Detailed Layer Architecture

# Layer 1: Access Layer

Composed of a group of stateless proxies, the access layer is the front layer of the system and endpoint to users. It validates client requests and reduces the returned results:

- Proxy is in itself stateless. It provides a unified service address using load balancing components such as Nginx, Kubernetes Ingress, NodePort, and LVS.

- As Milvus employs a massively parallel processing (MPP) architecture, the proxy aggregates and post-processes the intermediate results before returning the final results to the client.

# Layer 2: Coordinator

The Coordinator serves as the brain of Milvus. At any moment, exactly one Coordinator is active across the entire cluster. It is responsible for maintaining the cluster topology, scheduling all task types, and ensuring cluster-level consistency.

The following are some of the tasks handled by the Coordinator:

- DDL/DCL/TSO Management: Handles data definition language (DDL) and data control language (DCL) requests, such as creating or deleting collections, partitions, or indexes, and manages the timestamp oracle (TSO) and the issuing of time ticks.

- Streaming Service Management: Binds the Write-Ahead Log (WAL) with Streaming Nodes and provides service discovery for the streaming service.

- Query Management: Manages topology and load balancing for the Query Nodes, and provides and manages the serving query views to guide the query routing.

- Historical Data Management: Distributes offline tasks such as compaction and index-building to Data Nodes, and manages the topology of segments and data views.
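The timestamp oracle mentioned in the list above can be pictured as a hybrid timestamp allocator that combines a physical clock with a logical counter. The following is a minimal sketch for illustration only: the class name `HybridTSO`, the 18-bit logical counter, and the in-process lock are assumptions made for this example and are not taken from the Coordinator's actual implementation.

```python
import threading
import time

LOGICAL_BITS = 18  # assumed width of the logical counter
LOGICAL_MASK = (1 << LOGICAL_BITS) - 1


class HybridTSO:
    """Hypothetical allocator that hands out strictly increasing timestamps."""

    def __init__(self, clock=lambda: int(time.time() * 1000)):
        self._clock = clock  # returns physical time in milliseconds
        self._physical = 0
        self._logical = 0
        self._lock = threading.Lock()

    def allocate(self):
        with self._lock:
            now = self._clock()
            if now > self._physical:
                # Physical clock advanced: restart the logical counter.
                self._physical, self._logical = now, 0
            else:
                # Same (or rewound) millisecond: bump the logical counter.
                self._logical += 1
                if self._logical > LOGICAL_MASK:
                    # Logical space exhausted: borrow the next millisecond.
                    self._physical += 1
                    self._logical = 0
            return (self._physical << LOGICAL_BITS) | self._logical


def split(ts):
    """Return the (physical_ms, logical) parts of a timestamp."""
    return ts >> LOGICAL_BITS, ts & LOGICAL_MASK
```

Because the physical part occupies the high bits, comparing two timestamps as plain integers orders them by time first and by allocation order within the same millisecond second.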

# Layer 3: Worker Nodes

Worker nodes are the arms and legs of the system. They are simple executors that follow instructions from the coordinator. Worker nodes are stateless thanks to the separation of storage and computation, and can facilitate system scale-out and disaster recovery when deployed on Kubernetes. There are three types of worker nodes:

# Streaming node

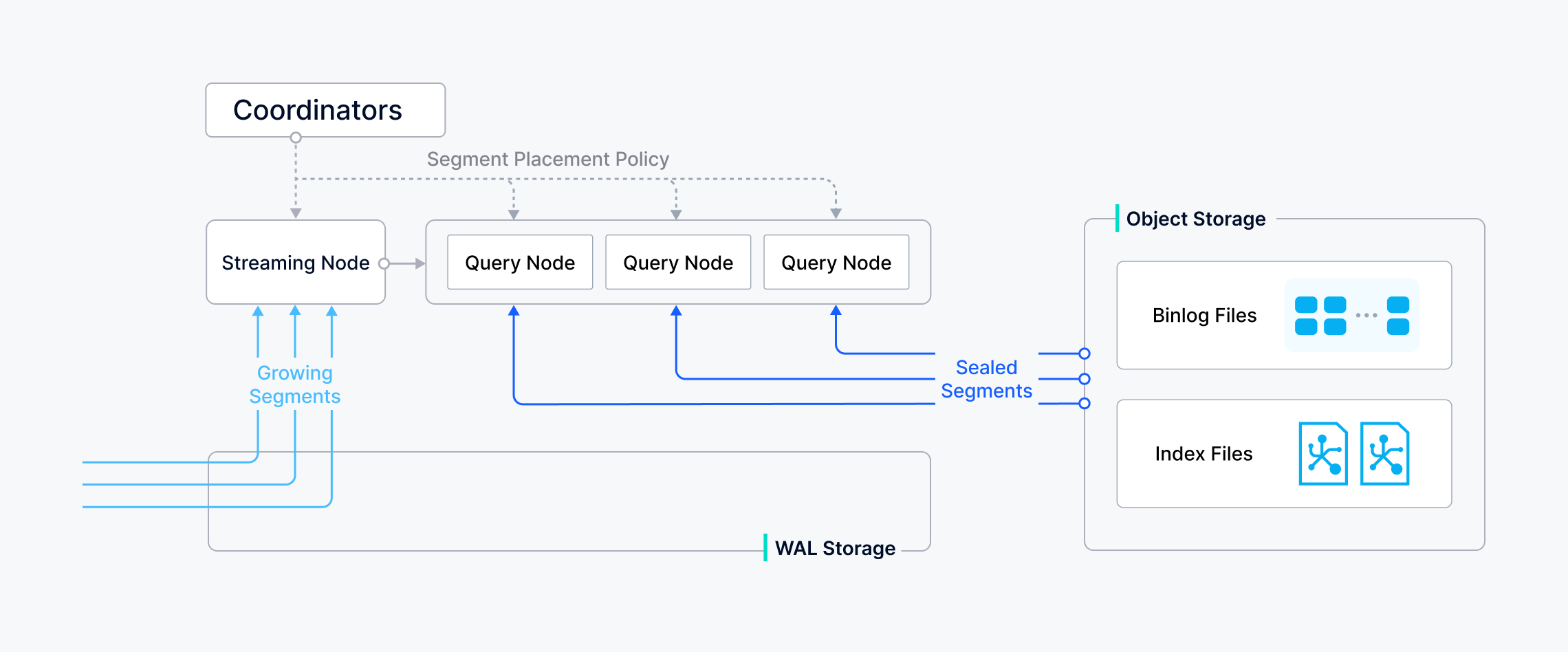

The Streaming Node serves as the shard-level "mini-brain", providing shard-level consistency guarantees and fault recovery based on the underlying WAL storage. It is also responsible for querying growing data and generating query plans. Additionally, it handles the conversion of growing data into sealed (historical) data.

# Query node

The query node loads historical data from object storage and serves queries over it.

# Data node

Data node is responsible for offline processing of historical data, such as compaction and index building.

# Layer 4: Storage

Storage is the bone of the system, responsible for data persistence. It comprises meta storage, log broker, and object storage.

# Meta storage

Meta storage stores snapshots of metadata, such as collection schemas and message consumption checkpoints. Storing metadata demands extremely high availability, strong consistency, and transaction support, so Milvus uses etcd as its meta store. Milvus also uses etcd for service registration and health checks.

# Object storage

Object storage stores snapshot files of logs, index files for scalar and vector data, and intermediate query results. Milvus uses MinIO as object storage and can be readily deployed on AWS S3 and Azure Blob, two of the world's most popular, cost-effective storage services. However, object storage has high access latency and charges by the number of queries. To improve its performance and lower the costs, Milvus plans to implement cold-hot data separation on a memory- or SSD-based cache pool.

# WAL storage

Write-Ahead Log (WAL) storage is the foundation of data durability and consistency in distributed systems. Before any change is committed, it is first recorded in a log, ensuring that, in the event of a failure, you can recover exactly where you left off.

Common WAL implementations include Kafka, Pulsar, and Woodpecker. Unlike traditional disk-based solutions, Woodpecker adopts a cloud-native, zero-disk design that writes directly to object storage. This approach scales with your needs and simplifies operations by removing the overhead of managing local disks.

By logging every write operation ahead of time, the WAL layer guarantees a reliable, system-wide mechanism for recovery and consistency -- no matter how complex your distributed environment grows.

# Data Flow and API Categories

Milvus APIs are categorized by their function and follow specific paths through the architecture:

| API Category | Operations | Example APIs | Architecture Flow |
|---|---|---|---|
| DDL/DCL | Schema & Access Control | createCollection, dropCollection, hasCollection, createPartition | Access Layer -> Coordinator |
| DML | Data Manipulation | insert, delete, upsert | Access Layer -> Streaming Worker Node |
| DQL | Data Query | search, query | Access Layer -> Batch Worker Node (Query Nodes) |
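The table above can be read as a simple lookup from API name to category and then to the path a request takes. The sketch below encodes only the rows shown in the table; the dictionary and function names are hypothetical and exist purely for illustration.

```python
# Hypothetical lookup tables that mirror the rows of the table above.
API_CATEGORIES = {
    "DDL/DCL": {"createCollection", "dropCollection", "hasCollection", "createPartition"},
    "DML": {"insert", "delete", "upsert"},
    "DQL": {"search", "query"},
}

ARCHITECTURE_FLOW = {
    "DDL/DCL": ["Access Layer", "Coordinator"],
    "DML": ["Access Layer", "Streaming Worker Node"],
    "DQL": ["Access Layer", "Batch Worker Node (Query Nodes)"],
}


def classify(api_name):
    """Return the category of an API listed in the table."""
    for category, apis in API_CATEGORIES.items():
        if api_name in apis:
            return category
    raise KeyError(f"{api_name!r} is not listed in the table")


def flow_of(api_name):
    """Return the layers a request for this API passes through."""
    return ARCHITECTURE_FLOW[classify(api_name)]
```

For example, `flow_of("upsert")` resolves to the DML path and `flow_of("hasCollection")` to the DDL/DCL path.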

# Example Data Flow: Search Operation

- Client sends a search request via SDK/RESTful API

- Load Balancer routes request to available Proxy in Access Layer

- Proxy uses routing cache to determine target nodes; contacts Coordinator only if cache is unavailable

- Proxy forwards request to appropriate Streaming Nodes, which then coordinate with Query Nodes for sealed data search while executing growing data search locally

- Query Nodes load sealed segments from Object Storage as needed and perform segment-level search

- Search results undergo multi-level reduction: Query Nodes reduce results across multiple segments, Streaming Nodes reduce results from Query Nodes, and Proxy reduces results from all Streaming Nodes before returning to client.
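The multi-level reduction in the last step can be sketched as three nested top-k merges. This is an illustrative model only: it assumes each hit is a `(distance, primary_key)` pair where a smaller distance means a closer match, and the function names are invented for this example.

```python
import heapq


def reduce_topk(partials, k):
    """Merge several hit lists of (distance, primary_key) into the k nearest."""
    return heapq.nsmallest(k, (hit for part in partials for hit in part))


def search_shard(query_node_segments, growing_hits, k):
    # Level 1: each Query Node reduces across its own sealed segments.
    node_results = [reduce_topk(segments, k) for segments in query_node_segments]
    # Level 2: the Streaming Node merges Query Node results with the hits
    # it found locally in growing data.
    return reduce_topk(node_results + [growing_hits], k)


def search(shards, k):
    # Level 3: the Proxy merges one reduced result per shard.
    return reduce_topk(
        [search_shard(nodes, growing, k) for nodes, growing in shards], k
    )
```

Each level keeps at most k hits, so the amount of data passed upward stays bounded regardless of how many segments were searched.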

# Example Data Flow: Data Insertion

- Client sends an insert request with vector data

- Access Layer validates and forwards request to Streaming Node

- Streaming Node logs operation to WAL Storage for durability

- Data is processed in real-time and made available for queries

- When segments reach capacity, Streaming Node triggers conversion to sealed segments

- Data Node handles compaction and builds indexes on top of the sealed segments, storing results in Object Storage

- Query Nodes load the newly built indexes and replace the corresponding growing data
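The first half of this flow (log first, then serve, then seal) can be modeled in a few lines. The sketch below is a toy, single-process model under stated assumptions: the class `StreamingNodeSketch`, the row-count capacity, and the full-log replay are all hypothetical simplifications, and real sealed segments live in object storage rather than in memory.

```python
class StreamingNodeSketch:
    """Toy model of the insert path on a single shard."""

    def __init__(self, segment_capacity):
        self.segment_capacity = segment_capacity
        self.wal = []      # stand-in for durable WAL storage
        self.growing = []  # rows in the current growing segment
        self.sealed = []   # immutable sealed segments

    def insert(self, row):
        self.wal.append(row)  # 1. log the operation first, for durability
        self._apply(row)      # 2. then make the row available for queries

    def _apply(self, row):
        self.growing.append(row)
        if len(self.growing) >= self.segment_capacity:
            # 3. the segment reached capacity: convert it to a sealed segment
            self.sealed.append(tuple(self.growing))
            self.growing = []

    def searchable_rows(self):
        return [r for seg in self.sealed for r in seg] + list(self.growing)

    @classmethod
    def recover(cls, wal, segment_capacity):
        """Rebuild in-memory state after a crash by replaying the log."""
        node = cls(segment_capacity)
        for row in wal:
            node.wal.append(row)
            node._apply(row)
        return node
```

Because every row reaches the log before it is applied, replaying the log reproduces exactly the rows that were visible before the failure.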

# Main Components

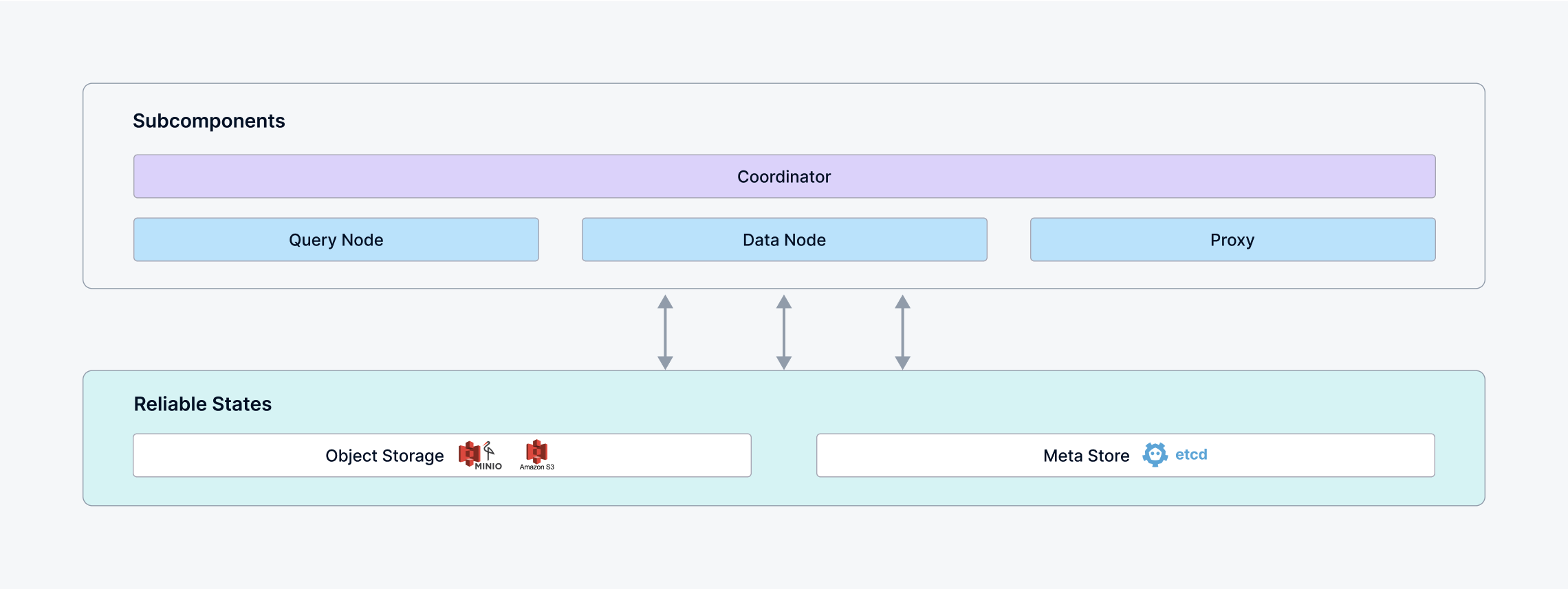

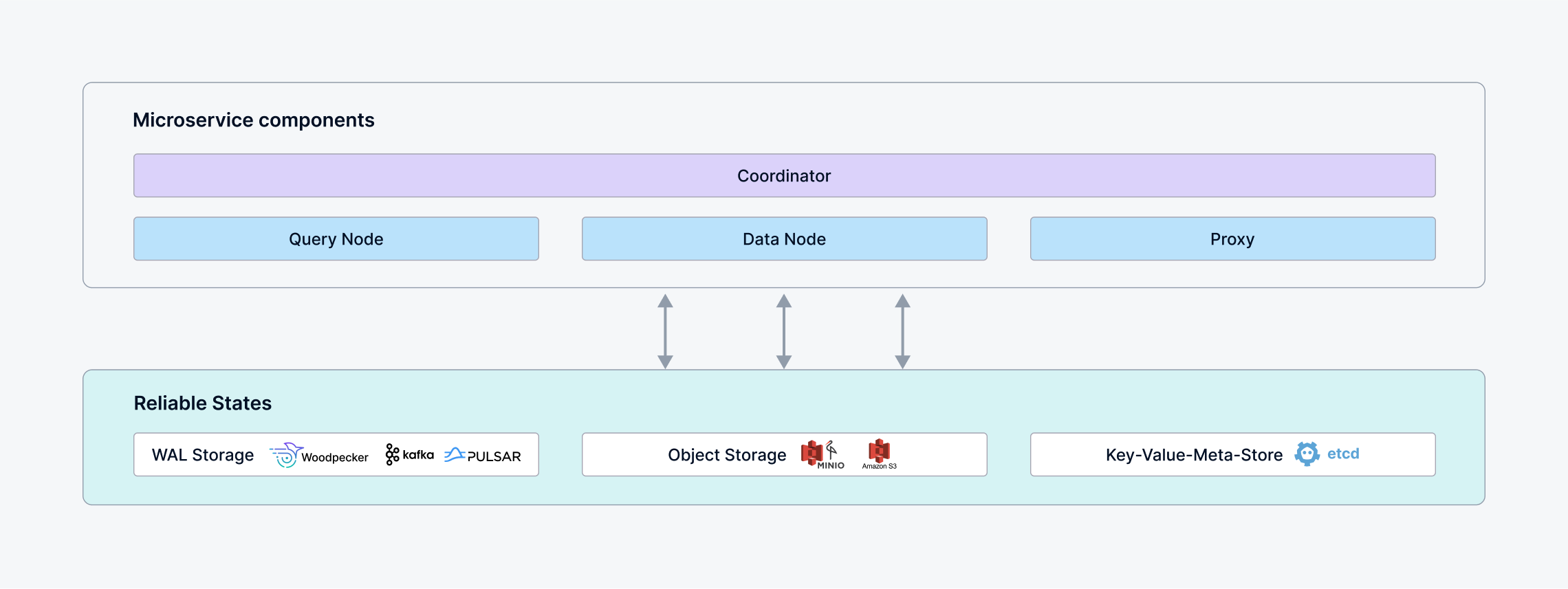

A Milvus cluster comprises five core components and three third-party dependencies. Each component can be deployed independently on Kubernetes:

# Milvus Components

- Coordinator: active-standby mode can be enabled to provide high availability.

- Proxy: one or more per cluster.

- Streaming Node: one or more per cluster.

- Query Node: one or more per cluster.

- Data Node: one or more per cluster.

# Third-party dependencies

- Meta Store: Stores metadata for the various Milvus components, e.g., etcd.

- Object Storage: Responsible for persisting large files in Milvus, such as index and binary log files, e.g., S3.

- WAL Storage: Provides the Write-Ahead Log (WAL) service for Milvus, e.g., Woodpecker.

- In Woodpecker's zero-disk mode, the WAL uses object storage and meta storage directly and needs no additional deployment, which reduces third-party dependencies.

# Milvus deployment modes

There are two modes for running Milvus:

# Standalone

A single instance of Milvus that runs all components in one process, suitable for small datasets and light workloads. In standalone mode, a simpler WAL implementation such as Woodpecker or RocksMQ can also be chosen to eliminate the need for a third-party WAL storage dependency.

Currently, you cannot perform an online upgrade from a standalone Milvus instance to a Milvus cluster, even if the WAL storage backend supports cluster mode.

# Cluster

A distributed deployment mode of Milvus where each component runs independently and can be scaled out for elasticity. This setup is suitable for large datasets and high-load scenarios.

# Streaming Service

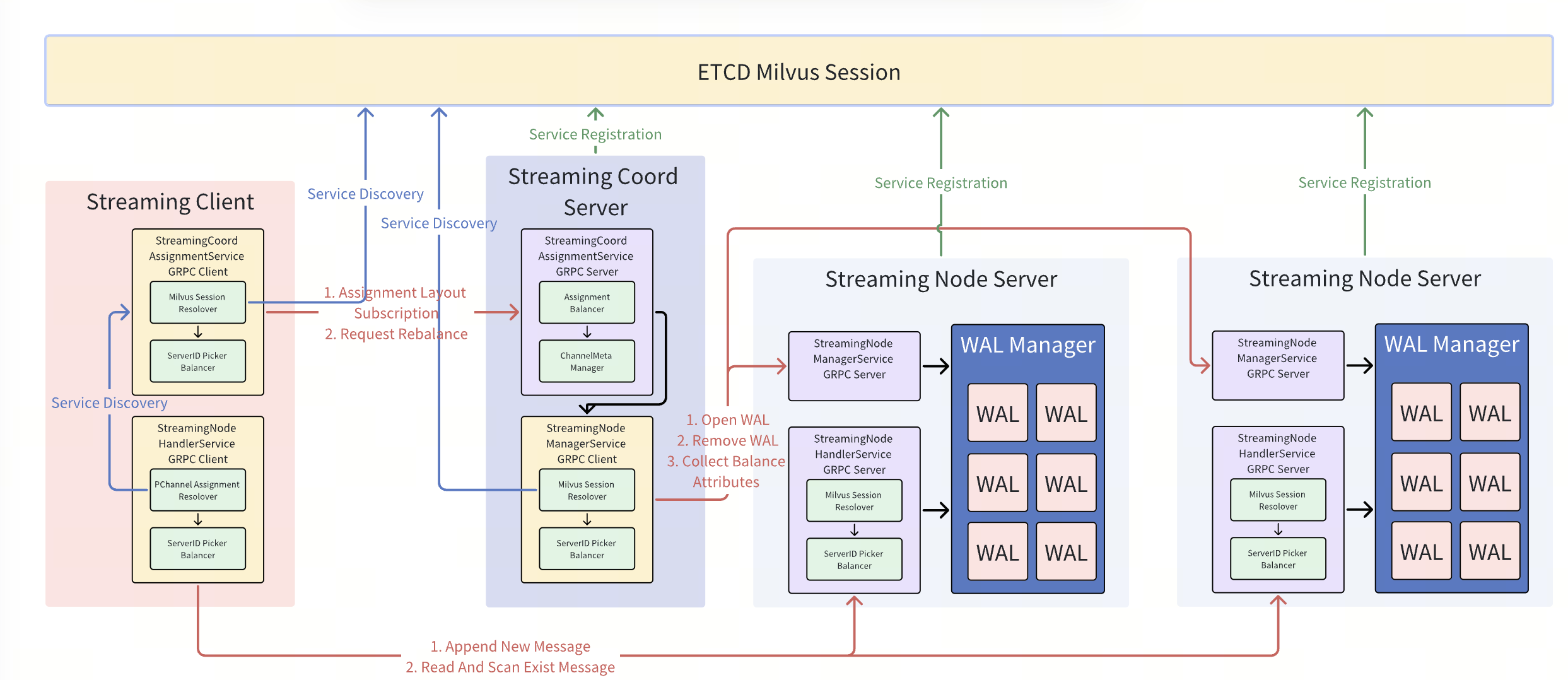

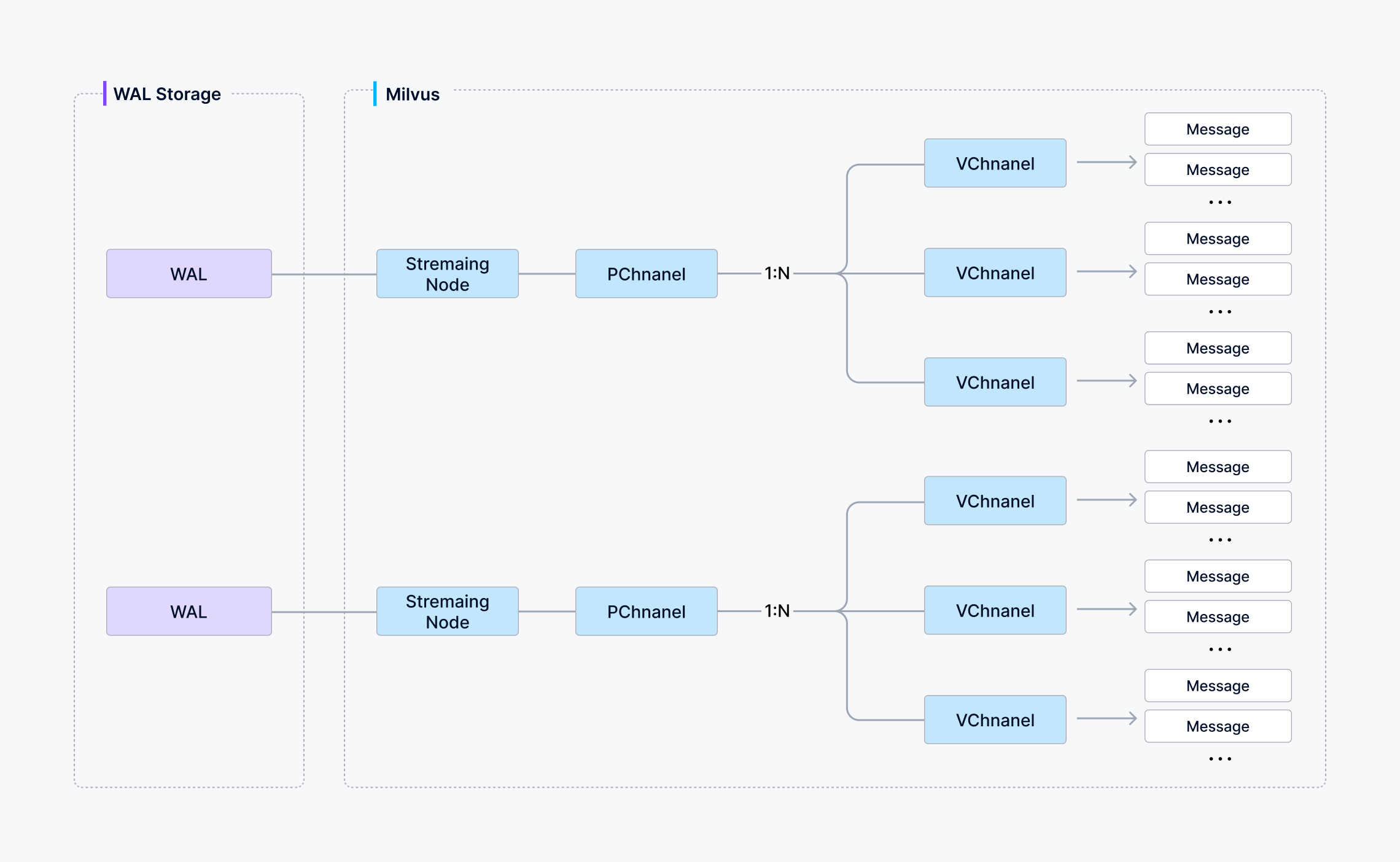

The Streaming Service is the internal streaming system module of Milvus, built around the Write-Ahead Log (WAL) to support various streaming-related functions. These include streaming data ingestion and subscription, fault recovery of cluster state, conversion of streaming data into historical data, and growing data queries. Architecturally, the Streaming Service is composed of three main components.

- Streaming Coordinator: A logical component in the coordinator node. It uses etcd for service discovery to locate available streaming nodes and is responsible for binding each WAL to its corresponding streaming node. It also registers a service that exposes the WAL distribution topology, allowing streaming clients to find the appropriate streaming node for a given WAL.

- Streaming Node Cluster: A cluster of streaming worker nodes responsible for all stream-processing tasks, such as WAL appending, state recovery, and growing data querying.

- Streaming Client: An internally developed Milvus client that encapsulates basic functionalities such as service discovery and readiness checks. It is used to initiate operations such as message writing and subscription.

# Message

The Streaming Service is a log-driven streaming system, so all write operations in Milvus (such as DML and DDL) are abstracted as Messages.

- Every Message is assigned a Timestamp Oracle (TSO) field by the Streaming Service, which indicates the message's order in the WAL. The ordering of messages determines the order of write operations in Milvus. This makes it possible to reconstruct the latest cluster state from the logs.

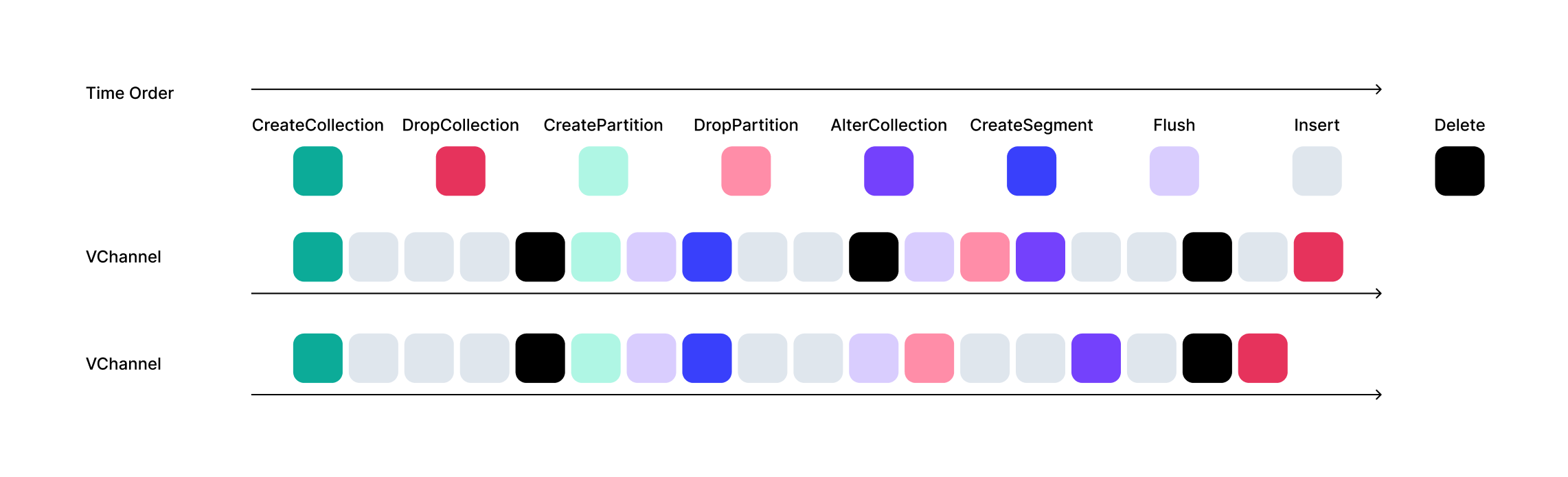

- Each Message belongs to a specific VChannel (Virtual Channel) and maintains certain invariant properties within that channel to ensure operation consistency. For example, an Insert operation must always occur before a DropCollection operation on the same channel.

The message order in Milvus may resemble the following:

# WAL Component

To support large-scale horizontal scalability, Milvus's WAL is not a single log file, but a composite of multiple logs. Each log can independently support streaming functionality for multiple VChannels. At any given time, a WAL component is allowed to operate on exactly one streaming node. This constraint is enforced by both the fencing mechanism of the underlying WAL storage and the streaming coordinator.
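One common way to realize such a fencing mechanism is a monotonically increasing term (or epoch): opening the log for a new owner invalidates every older owner. The sketch below illustrates that general technique only; the names `FencedLog` and `FencedError` are hypothetical, and the source does not describe how any particular WAL storage implements fencing.

```python
class FencedError(Exception):
    """Raised when a stale owner tries to append."""


class FencedLog:
    """Toy log that accepts appends only from the most recent owner."""

    def __init__(self):
        self.entries = []
        self.current_term = 0

    def open(self):
        """Grant ownership to a new writer and fence all older ones."""
        self.current_term += 1
        return self.current_term

    def append(self, term, message):
        if term != self.current_term:
            raise FencedError(
                f"term {term} was fenced by term {self.current_term}"
            )
        self.entries.append(message)
        return len(self.entries) - 1  # position of the new entry
```

With this scheme, a streaming node that lost ownership but has not yet noticed cannot corrupt the log, because its appends carry a stale term and are rejected by the storage layer.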

Additional features of the WAL component include:

- Segment Lifecycle Management: Based on policies such as memory conditions, segment size, and segment idle time, the WAL manages the lifecycle of every segment.

- Basic Transaction Support: Since each message has a size limit, the WAL component supports a simple transaction level to guarantee atomic writes at the VChannel level.

- High-Concurrency Remote Log Writing: Milvus supports third-party remote message queues as WAL storage. To mitigate the round-trip time (RTT) between the streaming node and remote WAL storage and thereby improve write throughput, the streaming service supports concurrent log writes. It maintains message order through TSO and TSO synchronization, and messages in the WAL are read in TSO order.

- Write-Ahead Buffer: After messages are written to the WAL, they are temporarily stored in a Write-Ahead Buffer. This enables tail reads of logs without fetching messages from remote WAL storage.

- Multiple WAL Storage Support: Woodpecker, Pulsar, and Kafka are supported. Using Woodpecker in zero-disk mode removes the remote WAL storage dependency.
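The Write-Ahead Buffer from the list above behaves like a bounded cache of the most recent log entries. The following sketch is a simplified illustration: the class name, the fixed entry-count capacity, and the assumption that entries arrive already in TSO order are choices made for this example.

```python
from collections import deque


class WriteAheadBuffer:
    """Keeps the most recent WAL entries in memory for tail reads."""

    def __init__(self, capacity):
        # The oldest entries fall off automatically once capacity is reached.
        self._buf = deque(maxlen=capacity)

    def on_appended(self, tso, message):
        """Called after a message has been written to WAL storage."""
        self._buf.append((tso, message))

    def read_from(self, tso):
        """Return buffered messages with timestamp >= tso, or None on a miss."""
        if not self._buf or tso < self._buf[0][0]:
            # Too old for the buffer: the caller must fall back to
            # reading from remote WAL storage.
            return None
        return [msg for t, msg in self._buf if t >= tso]
```

A reader that stays close to the tail is served entirely from memory, while a reader that has fallen behind gets `None` and has to catch up from WAL storage first.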

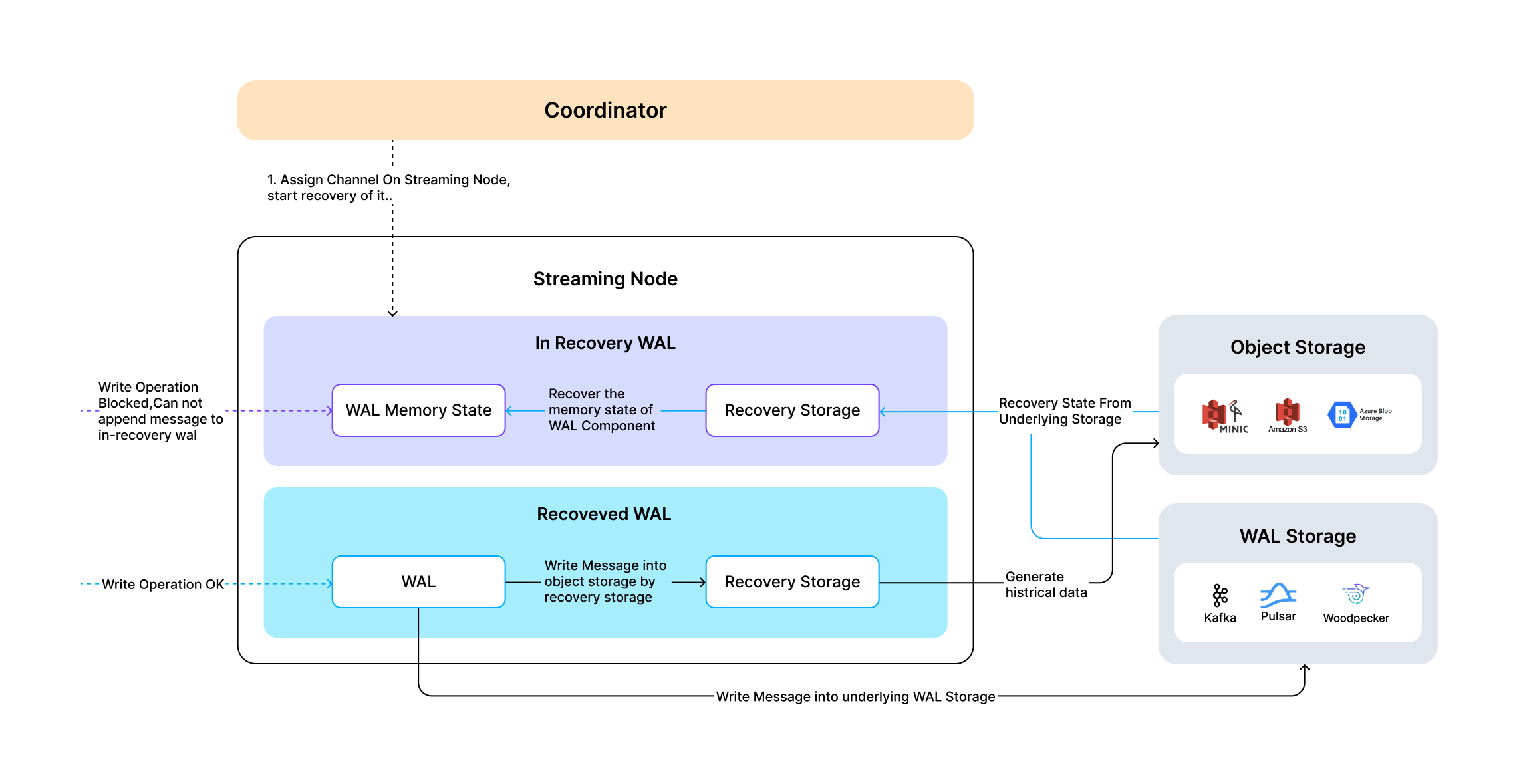

# Recovery Storage

The Recovery Storage component always runs on the same streaming node as its corresponding WAL component.

- It is responsible for converting streaming data into persisted historical data and storing it in object storage.

- It also handles in-memory state recovery for the WAL component on the streaming node.

# Query Delegator

The Query Delegator runs on each streaming node and is responsible for executing incremental queries on a single shard. It generates query plans, forwards them to the relevant Query Nodes, and aggregates the results.

In addition, the Query Delegator is responsible for broadcasting Delete operations to other Query Nodes.

The Query Delegator always coexists with the WAL component on the same streaming node. However, if the collection is configured with N replicas, the remaining N-1 Delegators are deployed on other streaming nodes.

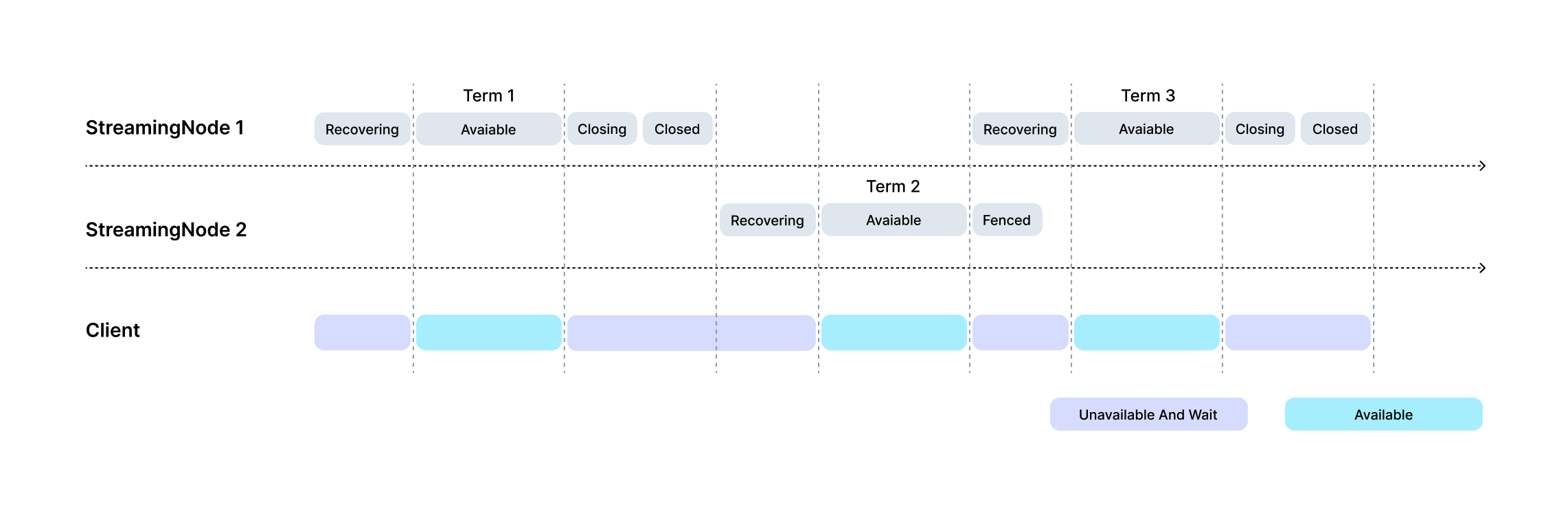

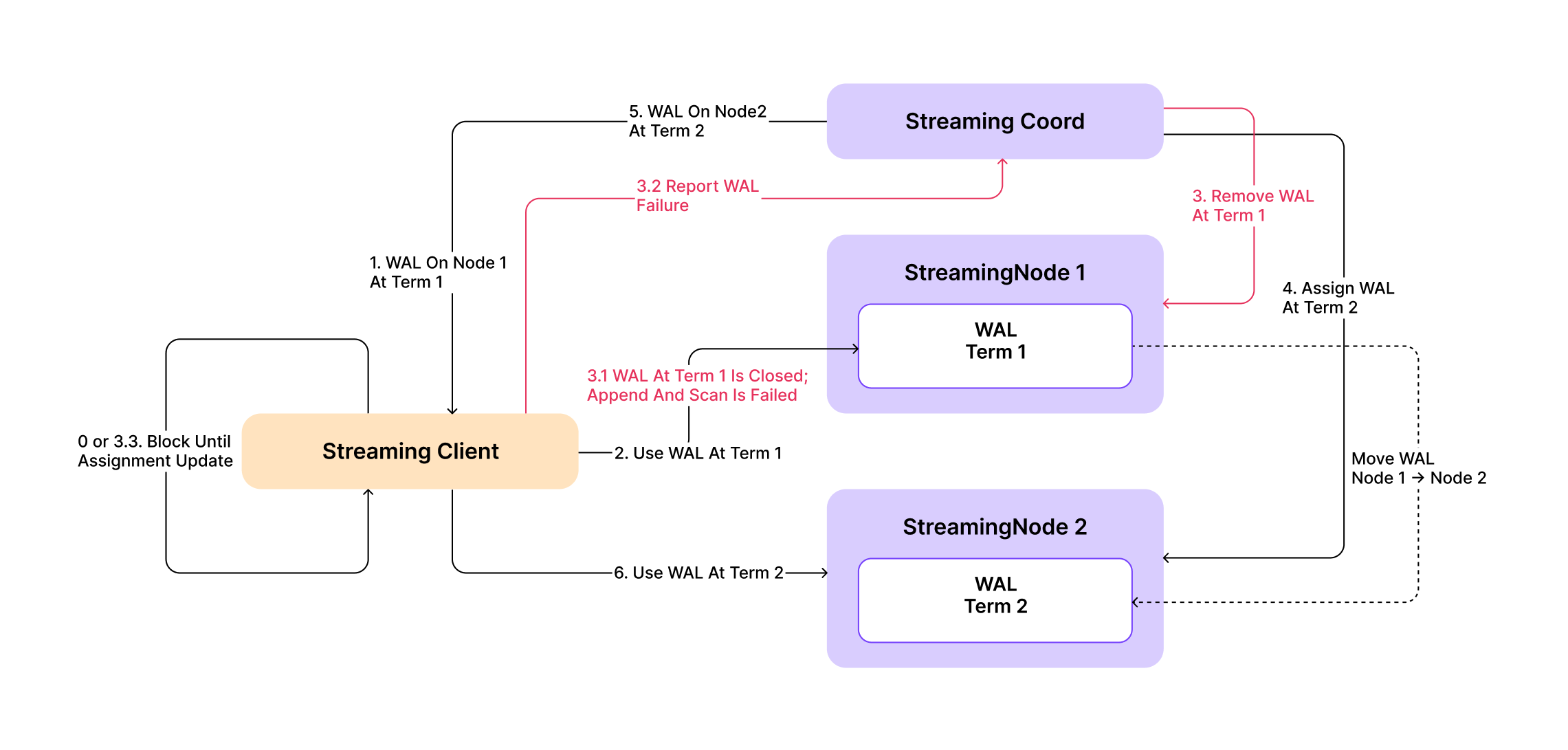

# WAL Lifetime and Wait for Ready

By separating computing nodes from storage, Milvus can easily transfer WAL from one streaming node to another, achieving high availability in streaming service.

# Wait for Ready

When a WAL is about to move to a new streaming node, clients will find that the old streaming node rejects some requests. Meanwhile, the WAL is recovered on the new streaming node, and the client waits until the WAL on the new streaming node is ready to serve.
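From the client's point of view, waiting for readiness amounts to a retry loop that re-resolves the WAL owner before each attempt. The sketch below is a generic illustration, not the Streaming Client's actual code: `NotReady`, `append_with_wait`, and the callback parameters `lookup_node` and `send` are hypothetical names, and the exponential backoff is an assumed policy.

```python
import time


class NotReady(Exception):
    """Raised by a streaming node that does not (yet) serve the WAL."""


def append_with_wait(lookup_node, send, message,
                     retries=5, backoff_s=0.01, sleep=time.sleep):
    """Send a message, waiting for the WAL to become ready on its new node."""
    for attempt in range(retries):
        node = lookup_node()  # re-resolve the current owner via discovery
        try:
            return send(node, message)
        except NotReady:
            # The old node rejected the request, or the new node is still
            # recovering: back off, then look the owner up again.
            sleep(backoff_s * (2 ** attempt))
    raise TimeoutError("WAL did not become ready in time")
```

Re-running the lookup inside the loop matters: the first rejection usually comes from the old node, and only a fresh lookup reveals where the WAL now lives.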

# Data Processing

This article provides a detailed description of the implementation of data insertion, index building, and data query in Milvus.

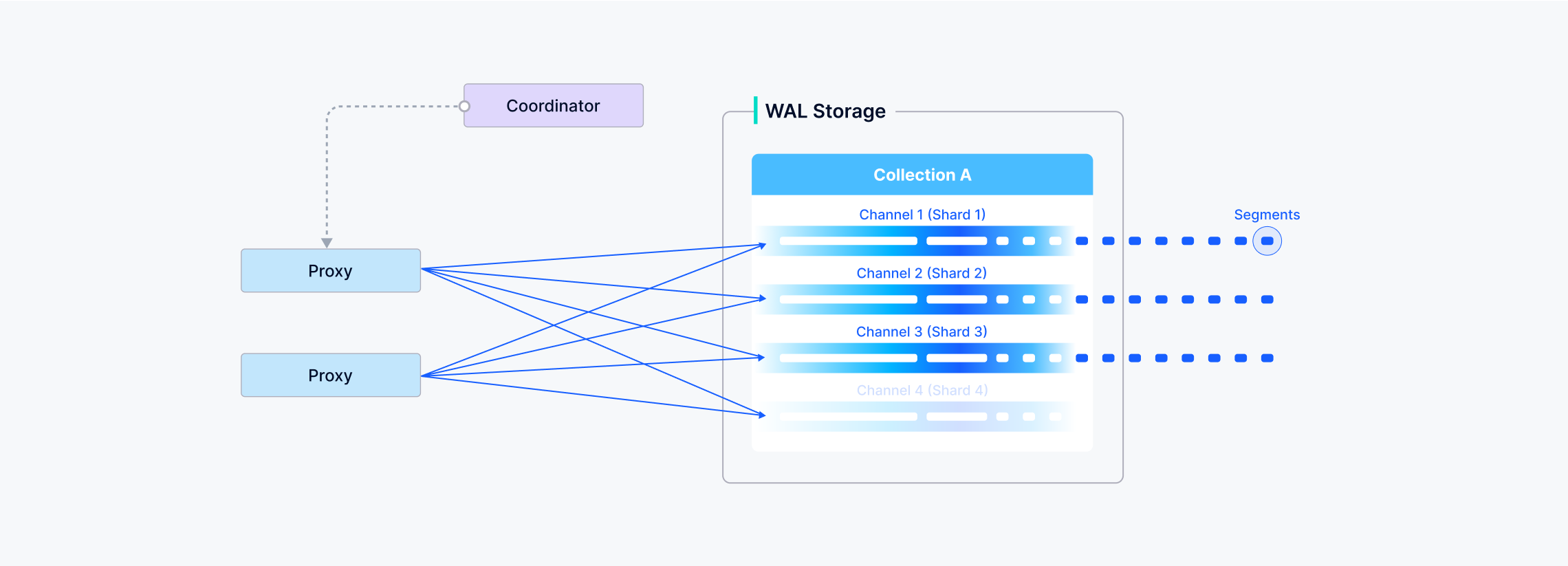

# Data insertion

You can choose how many shards a collection uses in Milvus -- each shard maps to a virtual channel (vchannel). As illustrated below, Milvus then assigns every vchannel to a physical channel (pchannel), and each pchannel is bound to a specific Streaming Node.

After data verification, the proxy splits the written message into per-shard data packages according to the specified shard routing rules.

The data written to each shard (vchannel) is then sent to the Streaming Node bound to the corresponding pchannel.
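The split-and-route step can be sketched as follows. The routing rule used here (a CRC32 hash of the primary key, modulo the shard count) is an assumption chosen for illustration, since the text only says "specified shard routing rules"; the channel naming scheme and function names are likewise hypothetical.

```python
import zlib
from collections import defaultdict


def shard_of(primary_key, num_shards):
    # crc32 is stable across runs, unlike Python's built-in hash() for strings.
    return zlib.crc32(str(primary_key).encode("utf-8")) % num_shards


def split_by_shard(rows, num_shards, vchannel_to_pchannel, pchannel_to_node):
    """Group rows per vchannel and resolve the Streaming Node for each group."""
    packages = defaultdict(list)
    for row in rows:
        vchannel = f"vchan-{shard_of(row['id'], num_shards)}"
        packages[vchannel].append(row)
    return {
        vchannel: (pchannel_to_node[vchannel_to_pchannel[vchannel]], batch)
        for vchannel, batch in packages.items()
    }
```

The two lookup tables mirror the two levels of indirection described above: a vchannel is assigned to a pchannel, and a pchannel is bound to a Streaming Node.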

The Streaming Node assigns a Timestamp Oracle (TSO) to each data packet to establish a total ordering of operations. It performs consistency checks on the payload before writing it into the underlying write-ahead log (WAL). Once data is durably committed to the WAL, it's guaranteed not to be lost -- even in the event of a crash, the Streaming Node can replay the WAL to fully recover all pending operations.

Meanwhile, the Streaming Node asynchronously chops the committed WAL entries into discrete segments. There are two segment types:

- Growing segment: holds data that has not yet been persisted to object storage.

- Sealed segment: all of its data has been persisted to object storage, and that data is immutable.

The transition of a growing segment into a sealed segment is called a flush. The Streaming Node triggers a flush as soon as it has ingested and written all available WAL entries for that segment, that is, when there are no more pending records in the underlying write-ahead log. At that point the segment is finalized and made read-optimized.

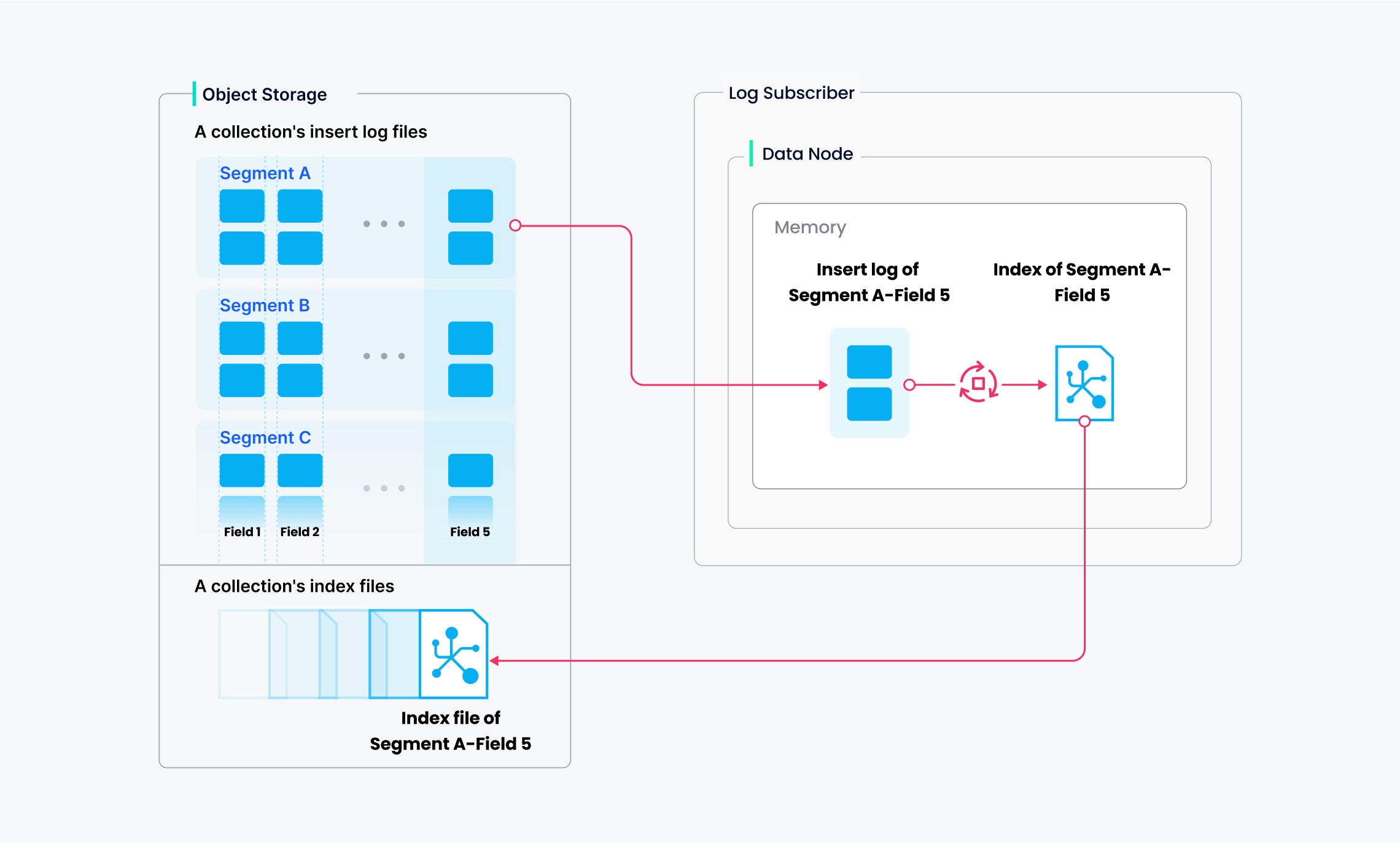

# Index building

Index building is performed by the Data Node. To avoid frequent index rebuilding when data is updated, a collection in Milvus is divided further into segments, each with its own index.

Milvus supports building an index for each vector field, scalar field, and primary field. Both the input and output of index building involve object storage: the Data Node loads the log snapshots to be indexed from a segment (which resides in object storage) into memory, deserializes the corresponding data and metadata to build the index, serializes the index once building completes, and writes it back to object storage.

Index building mainly involves vector and matrix operations and is therefore computation- and memory-intensive. Because of their high dimensionality, vectors cannot be indexed efficiently with traditional tree-based indexes, but they can be indexed with techniques better suited to the task, such as cluster-based or graph-based indexes. Regardless of its type, building an index involves massive iterative calculations over large-scale vectors, such as k-means clustering or graph traversal.

Unlike indexing for scalar data, building a vector index has to take full advantage of SIMD (single instruction, multiple data) acceleration. Milvus has built-in support for SIMD instruction sets such as SSE, AVX2, and AVX512. Given the bursty ("hiccup") and resource-intensive nature of vector index building, elasticity is crucial to Milvus in economic terms. Future Milvus releases will further explore heterogeneous computing and serverless computation to bring down the related costs.

Milvus also supports scalar filtering and primary field queries. It has built-in indexes to improve query efficiency, e.g., Bloom filter indexes, hash indexes, tree-based indexes, and inverted indexes, and plans to introduce more external indexes, e.g., bitmap indexes and rough indexes.

# Data query

Data query refers to the process of searching a specified collection for the k vectors nearest to a target vector, or for all vectors within a specified distance range of that vector. Vectors are returned together with their corresponding primary keys and fields.
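The two query shapes described above, top-k and range search, can be illustrated with a brute-force scan. This is a conceptual sketch only: it assumes Euclidean (L2) distance and entities stored as plain dictionaries with a `vector` key, and it deliberately ignores the indexes that a real deployment would use to avoid scanning every vector.

```python
import heapq
import math


def l2(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def topk_search(entities, target, k):
    """Return the k entities nearest to target as (distance, entity) pairs."""
    scored = ((l2(e["vector"], target), e) for e in entities)
    return heapq.nsmallest(k, scored, key=lambda pair: pair[0])


def range_search(entities, target, radius):
    """Return all entities within radius of target, nearest first."""
    hits = [(l2(e["vector"], target), e) for e in entities]
    return sorted((h for h in hits if h[0] <= radius), key=lambda pair: pair[0])
```

Both functions return each matching entity whole, which corresponds to returning vectors together with their primary keys and other fields.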

A collection in Milvus is split into multiple segments; the Streaming Node loads growing segments and maintains realtime data, while the Query Nodes load sealed segments.

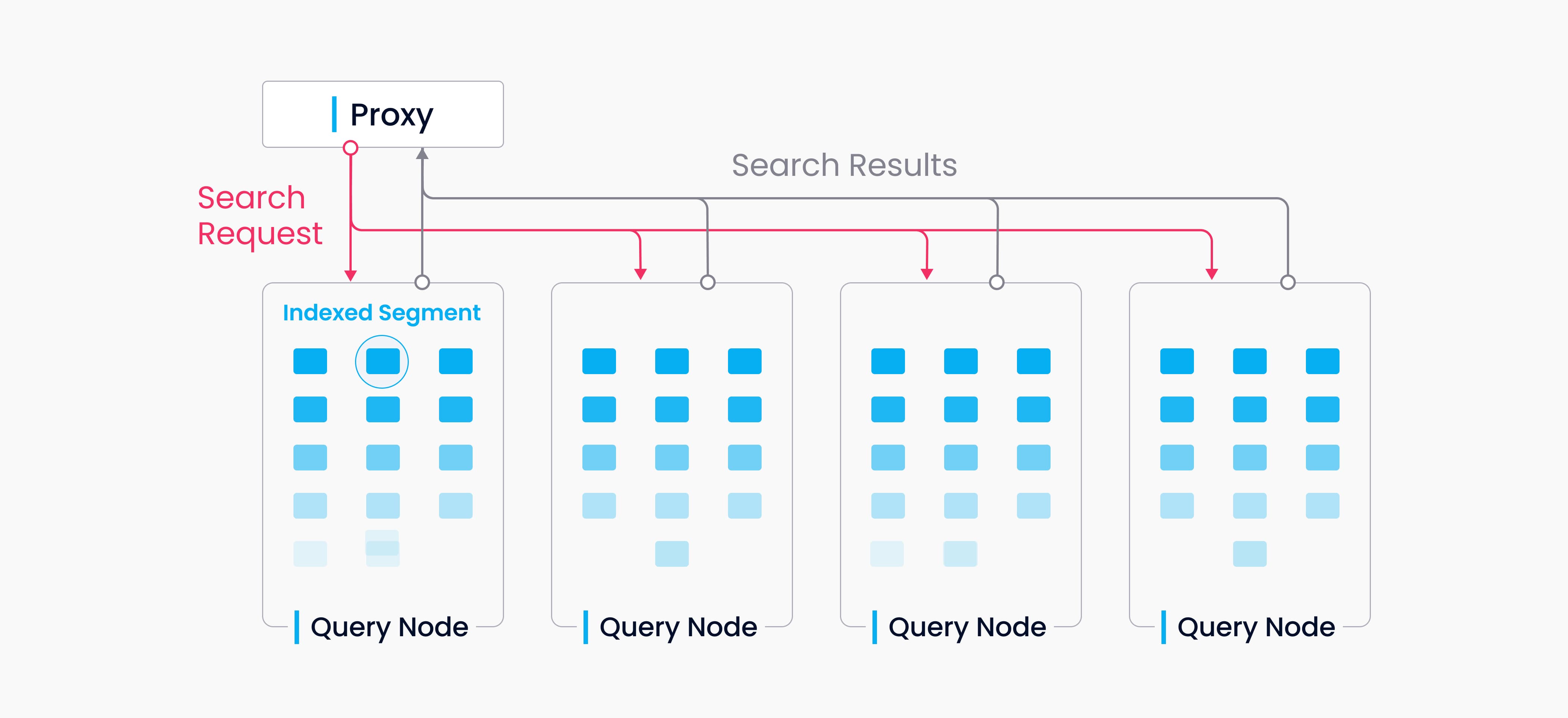

When a query or search request arrives, the proxy concurrently sends it to all Streaming Nodes responsible for the related shards.

Each Streaming Node generates a query plan, searches its local growing data, and simultaneously contacts remote Query Nodes to retrieve historical results, then aggregates these into a single shard result.

Finally, the proxy collects all shard results, merges them into the final outcome, and returns it to the client.

When the growing segment on a Streaming Node is flushed into a sealed segment -- or when a Data Node completes a compaction -- the Coordinator initiates a handoff operation to convert that growing data into historical data. The Coordinator then evenly distributes the sealed segments across all Query Nodes, balancing memory usage, CPU overhead, and segment count, and releases any redundant segment.
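The balancing step can be approximated with a greedy "least-loaded node first" assignment. The sketch below is a simplified stand-in, not the Coordinator's algorithm: it balances on a single hypothetical size figure per segment, whereas the text mentions memory usage, CPU overhead, and segment count together.

```python
import heapq


def assign_segments(segments, query_nodes):
    """Greedily place segments on the currently least-loaded Query Node.

    segments: {segment_id: size}; returns {node: [segment_id, ...]}.
    """
    heap = [(0, node) for node in query_nodes]  # (assigned size, node)
    heapq.heapify(heap)
    placement = {node: [] for node in query_nodes}
    # Place large segments first so smaller ones can even out the remainder.
    ordered = sorted(segments.items(), key=lambda kv: (-kv[1], kv[0]))
    for seg_id, size in ordered:
        load, node = heapq.heappop(heap)
        placement[node].append(seg_id)
        heapq.heappush(heap, (load + size, node))
    return placement
```

A production balancer would also have to move segments that are already loaded and account for replicas; this sketch covers only the initial placement of a batch of sealed segments.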